Courses in Foundational Math

- Solving Equations

- Measurement

- Mathematical Fundamentals

- Geometry I

- Reasoning with Algebra

- Functions and Quadratics

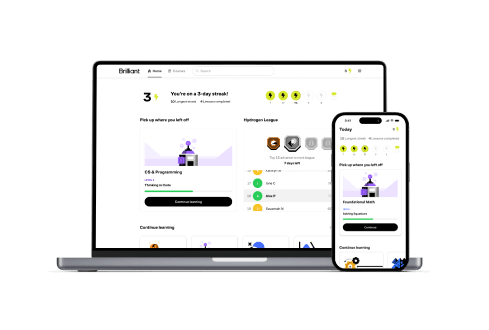

Whether you’re a complete beginner or ready to dive into machine learning and beyond, Brilliant makes it easy to level up fast with fun, bite-sized lessons.

Visual, interactive lessons make concepts feel intuitive — so even complex ideas just click. Our real-time feedback and simple explanations make learning efficient.

Students and professionals alike can hone dormant skills or learn new ones. Progress through lessons and challenges tailored to your level. Designed for ages 13 to 113.

We make it easy to stay on track, see your progress, and build your problem-solving skills one concept at a time.

Form a real learning habit with fun, well-paced content, game-like progress tracking, and friendly reminders.

All of our courses are crafted by award-winning teachers, researchers, and professionals from MIT, Caltech, Duke, Microsoft, Google, and more.