Prisoner's Dilemma

The prisoner's dilemma is a problem in game theory in which two competing players end up in a worse situation because they assume the other one won't cooperate.

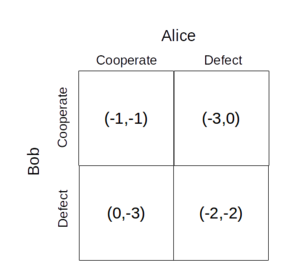

The police have captured two criminals and are interrogating them in separate rooms, so they can't communicate with each other. They offer each the following deal:

- If Alice snitches on Bob, Alice goes free and Bob spends three years in jail.

- Similarly, if Bob snitches on Alice, Bob goes free and Alice spends three years in jail.

- If neither of them snitch on each other, then they both spend one year in jail.

- If they both snitch on each other, then they both spend two years in jail.

The prisoner's dilemma has been used to model many real-world applications, such as nuclear war, the evolution of reciprocal altruism, and the tragedy of the commons.

Contents

Nash Equilbrium and Pareto Efficiency

In order to determine the outcome of the game, we need a way to describe how each player makes decisions. Each player picks the action that will get them the highest payoff assuming that the other player's action is fixed. This is ideal for describing the prisoner's dilemma since each prisoner must make their decision without communicating with the other.

Bob reasons like this:

- If Alice decides to cooperate, then I spend 1 year in jail if I cooperate and 0 if I defect, so I should defect.

- If Alice decides to defect, then I spend 3 years in jail if I cooperate and 2 if I defect, so I should defect.

Alice also reasons the same way. This situation is a Nash equilibrium. It's optimal to defect, as under either situation the optimal solution for "me" is to defect.

However, this equilibrium doesn't capture the notion of the “best” outcome. Both players cooperating is better for both players. The Pareto efficient outcome is the best

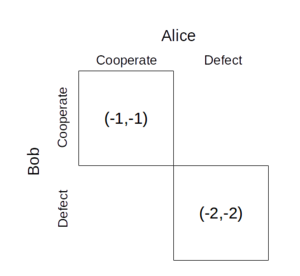

How many Nash equilibria does the following game have? Assume that we restrict ourselves to pure strategies (that is, each agent can only pick exactly one strategy).

"Superrationality" and Newcomb's Problem

Since the outcome is better for both players if they both cooperate than if they both defect, many people have tried to develop theories of decision making that ensure the players will cooperate.

Douglas Hofstadter proposed a solution known as "superrationality." Superrationality assumes that the player is playing against a copy of themselves. If they are truly a copy, then it is impossible for one player to cooperate while the other defects. The only choice is between both cooperating and both defecting, and both cooperating is the best.

This type of thinking is the basis of timeless decision theory, an alternative to the standard form of decision making described aboved [1]. In timeless decision theory, instead of choosing an action assuming that everyone else's actions are fixed, the agent chooses an action assuming that all other agents identical to it must choose the same action. This thinking has also been used to solve Newcomb's paradox. In that problem, a robot that can predict your actions gives you two boxes, which are filled with money depending on what the robot predicts you will do. If the robot can predict your actions perfectly, that's the same as you playing against a copy of yourself. This setup is the same as the superrational prisoner's dilemma.

Iterated Prisoner's Dilemma

In the standard prisoner's dilemma, the players only play against each other once. However, in real-life games, players will generally play against each other multiple times. They need to take into account what the other player will do in future rounds based on what happens in this round. For instance, you may think it is rational to steal from

In the case where both players know there are exactly \(N\) rounds, the rational solution can be found using K-level thinking. Consider the \(N^{th}\) round. Player 1 reasons that since there are no more rounds in the future, there is no reason to cooperate, so she defects. Similarly, player 2 reasons that he should defect. But on the \((N-1)^{th}\) round the reasoning is the same. They both know that they will both defect on the \(N^{th}\) round anyway, so there's no reason not to defect on the \((N-1)^{th}\) round. By induction, they both always defect. As before, the rational solution is strictly worse for both players than always cooperating.

This implies that it is easier to get agents to cooperate when they have less information about what the game will look like in the future.

It is extremely difficult to say in a strict way what strategies are best in the iterated prisoner's dilemma, especially because the success of one strategy depends on what strategies other players are using. The best way to figure it out is to write programs (or "Bots") that represent agents using the strategies, and then have them play against each other in a tournament.

The simplest two strategies are: [2]

- CooperateBot: CooperateBot always cooperates

- DefectBot: DefectBot always defects

Since DefectBot always defects, regardless of what you do, then the best option when playing against DefectBot is always to defect. When playing against DefectBot, it might make sense to have a strategy like

- ReasonableBot: ReasonableBot defects against DefectBot, but cooperates otherwise

Unfortunately, this leaves one open to a counter strategy:

- TrollBot: TrollBot cooperates with anyone who cooperates with DefectBot, and defects against anyone who defects against DefectBot

If a bot plays against a pool of 99 DefectBots and 1 TrollBot, then it's still worth it for

The most commonly studied strategy in the iterated prisoner's dilemma is tit-for-tat. A TitForTatBot will cooperate on the first round it plays

In evolutionary biology, the tit-for-tat strategy is used to explain the development of reciprocal altruism in species. Despite the fact that each individual gene is selfish (that is, will always have a higher payoff at any given point by defecting), it can be overall better for organisms to help each other out, so as to avoid the unfavorable equilibrium of forever defecting against each other. In evolutionary iterated prisoner's dilemma program tournaments, each bot is replicated at a rate proportional to the amount of payoff it has received. This mimics biology, in which an animal that successfully consumes another animal is more likely to reproduce than one that doesn't, and even more likely to reproduce than one that spends energy combating another animal.

References

- Yudkowsky, E. Timeless Decision Theory. Retrieved from https://intelligence.org/files/TDT.pdf

- LaVictoire, P. Robust Cooperation in the Prisoner's Dilemma. Retrieved from http://lesswrong.com/lw/hmw/robust_cooperation_in_the_prisoners_dilemma/