Expected Value

In probability theory, an expected value is the theoretical mean value of a numerical experiment over many repetitions of the experiment. Expected value is a measure of central tendency: a value toward which the average of the results tends. When a probability distribution is normal, the outcomes cluster symmetrically around the expected value.

Any given random variable contains a wealth of information. It can have many (or infinitely many) possible outcomes, and each outcome can have a different likelihood. The expected value summarizes all this information in a single numerical value.


Definition

If the sample space of a probability experiment contains only numerical outcomes, then a random variable is the variable that represents these outcomes. For example, the result of rolling a fair six-sided die is a random variable that takes each of the values from 1 to 6 with probability \(\frac{1}{6}.\) This is an example of a discrete random variable.

For a discrete random variable, the expected value can be calculated by multiplying each numerical outcome by the probability of that outcome, and then summing those products together. The expected value of a fair six-sided die is calculated as follows:

\[\frac{1}{6}(1) + \frac{1}{6}(2) + \frac{1}{6}(3) + \frac{1}{6}(4) + \frac{1}{6}(5) + \frac{1}{6}(6) = 3.5.\]

Let \(X\) be a discrete random variable. Then the expected value of \(X\), denoted as \(\text{E}[X]\) or \(\mu\), is

\[\text{E}[X]=\mu=\sum\limits_{x}{xP(X=x)}.\]

A stack of cards contains one card labeled with \(1\), two cards labeled with \(2\), three cards labeled with \(3\), and four cards labeled with \(4\). If the stack is shuffled and a card is drawn, what is the expected value of the card drawn?

Let \(X\) be the random variable that represents the value of the card drawn. Then

\[\begin{align} P(X=1)&=\dfrac{1}{10}\\ P(X=2)&=\dfrac{1}{5}\\ P(X=3)&=\dfrac{3}{10}\\ P(X=4)&=\dfrac{2}{5}\\ \Rightarrow \text{E}[X] &=\left(1\times\dfrac{1}{10}\right)+\left(2\times\dfrac{1}{5}\right)+\left(3\times\dfrac{3}{10}\right)+\left(4\times\dfrac{2}{5}\right)\\ &=3. \end{align}\]

The expected value of the card drawn is \(3.\ _\square\)
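The summation formula \(\text{E}[X]=\sum_x xP(X=x)\) can be sketched in a few lines of Python. This is a minimal illustration, assuming a distribution is stored as a dictionary mapping each outcome to its probability; the helper name `expected_value` is our own, and `fractions.Fraction` is used only to keep the arithmetic exact.

```python
from fractions import Fraction

def expected_value(dist):
    """Return the sum of x * P(X = x) for a finite distribution {x: P(X = x)}."""
    total_prob = sum(dist.values())
    # A valid distribution's probabilities must sum to 1.
    if total_prob != 1:
        raise ValueError(f"probabilities sum to {total_prob}, not 1")
    return sum(x * p for x, p in dist.items())

# Fair six-sided die: each face 1..6 has probability 1/6.
die = {x: Fraction(1, 6) for x in range(1, 7)}

# Card stack: k cards labeled k for k = 1..4, so 10 cards in total.
cards = {k: Fraction(k, 10) for k in range(1, 5)}

print(expected_value(die))    # 7/2
print(expected_value(cards))  # 3
```

Both results agree with the hand calculations above: \(3.5\) for the die and \(3\) for the card stack.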

From a measure-theoretic perspective, let \((\Omega, \mathcal F, \mathbb P)\) be a probability space. To compute the expectation, or integral, of general random variables, we proceed as follows. (See Axioms of Probability for the definition of a \(\sigma\)-algebra.)

Step 1: Expectation \(\mathbb E \{X\}\) of simple random variables

A random variable \(X\) is called simple if it can be written as \(X = \sum_{i=1}^{n} a_i \mathbb 1_{A_i}\), where each \(a_i \in \mathbb R\), each \(A_i \in \mathcal F\), and the sets \(A_i\) form a partition of \(\Omega\); that is, \(\bigcup_{i=1}^{n} A_i = \Omega\) and \(A_i \cap A_j = \emptyset\) for all \(i \ne j\). We define the expectation of a simple random variable as

\(\mathbb E \{X\} = \sum_{i=1}^{n} a_i \mathbb P(A_i)\), where \(\mathbb P\) is the probability measure on the \(\sigma\)-algebra \(\mathcal F\) of subsets of \(\Omega\). \(\mathbb E \{X\}\) is equivalently written as \(\int X(\omega)\, \mathbb P(d\omega)\) or \(\int X \, d \mathbb P\).

Since the same simple random variable can be written in many different ways (for example, one of the sets \(A_i\) can be split into two pieces carrying the same coefficient \(a_i\)), we must show that every representation yields the same value, so that the expectation is well defined.

The definition of \(\mathbb E \{X\}\) for simple random variables is well defined.

Proof:

Suppose the same simple random variable has two representations, \(X = \sum_{i=1}^{n} a_i \mathbb 1_{A_i} = \sum_{j=1}^{m} b_j \mathbb 1_{B_j}\), where \(A_1, \ldots, A_n\) and \(B_1, \ldots, B_m\) are partitions of \(\Omega\) by sets in \(\mathcal F\). Note that we can write each \(A_i = A_i \cap \Omega = A_i \cap \left(\bigcup_{j=1}^{m} B_j\right) = \bigcup_{j=1}^{m} (A_i \cap B_j)\) and, likewise, each \(B_j = \bigcup_{i=1}^{n} (A_i \cap B_j)\). Since the \(A_i\) are pairwise disjoint and so are the \(B_j\), the sets \(A_i \cap B_j\) are pairwise disjoint, and their union is \(\Omega\). Thus we can rewrite both representations over this common refinement:

\[X = \sum_{i=1}^{n} \sum_{j=1}^{m} a_i \mathbb 1_{A_i \cap B_j} = \sum_{j=1}^{m} \sum_{i=1}^{n} b_j \mathbb 1_{A_i \cap B_j}.\]

If \(A_i \cap B_j \ne \emptyset\), evaluating both expressions at any \(\omega \in A_i \cap B_j\) gives \(a_i = X(\omega) = b_j\). By finite additivity of \(\mathbb P\), \(\mathbb P(A_i) = \sum_{j=1}^{m} \mathbb P(A_i \cap B_j)\) and \(\mathbb P(B_j) = \sum_{i=1}^{n} \mathbb P(A_i \cap B_j)\), so

\[\sum_{i=1}^{n} a_i \mathbb P(A_i) = \sum_{i=1}^{n} \sum_{j=1}^{m} a_i \mathbb P(A_i \cap B_j) = \sum_{j=1}^{m} \sum_{i=1}^{n} b_j \mathbb P(A_i \cap B_j) = \sum_{j=1}^{m} b_j \mathbb P(B_j).\]

The middle equality holds because \(a_i = b_j\) whenever \(A_i \cap B_j \ne \emptyset\), while terms with \(A_i \cap B_j = \emptyset\) vanish on both sides since \(\mathbb P(\emptyset) = 0\). Both representations therefore give the same value of \(\mathbb E\{X\}\), so the definition of expectation on simple random variables is well defined. \(\blacksquare\)
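On a finite sample space, the well-definedness argument can be checked directly. The sketch below is a hypothetical illustration, not part of the proof: it takes \(\Omega\) to be the faces of a fair die with the uniform measure, writes the same simple random variable (equal to 1 on even faces and 0 on odd faces) in two representations, one a refinement of the other, and evaluates \(\sum_i a_i \mathbb P(A_i)\) for each. All helper names are our own.

```python
from fractions import Fraction

omega = frozenset(range(1, 7))  # sample space: faces of a die

def prob(event):
    """Uniform probability measure on omega."""
    return Fraction(len(event), len(omega))

def simple_expectation(representation):
    """Compute the sum of a_i * P(A_i) for a list of (a_i, A_i) pairs.

    The sets A_i must partition omega.
    """
    sets = [A for _, A in representation]
    assert frozenset().union(*sets) == omega, "sets must cover omega"
    assert sum(len(A) for A in sets) == len(omega), "sets must be disjoint"
    return sum(a * prob(A) for a, A in representation)

def evaluate(representation, w):
    """Value of the sum of a_i * 1_{A_i} at the point w."""
    return sum(a for a, A in representation if w in A)

# Two representations of the same simple random variable.
coarse = [(1, frozenset({2, 4, 6})), (0, frozenset({1, 3, 5}))]
fine = [(1, frozenset({2})), (1, frozenset({4, 6})),
        (0, frozenset({1})), (0, frozenset({3, 5}))]

# They define the same function on omega ...
assert all(evaluate(coarse, w) == evaluate(fine, w) for w in omega)
# ... and give the same expectation.
print(simple_expectation(coarse), simple_expectation(fine))  # 1/2 1/2
```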

Properties

The two theorems below show how translating or scaling a random variable by a constant changes its expected value. An explanation follows.

For random variable \(X\) and any constant \(c\),

\[\text{E}[X + c] = \text{E}[X] + c.\]

We have

\[\begin{align} \text{E}[X + c] &= \sum_x (x+c) P(X+c=x+c) \\ &= \sum_x xP(X=x) + \sum_x cP(X=x)\\ &= \text{E}[X] + c \sum_x P(X=x)\\ & = \text{E}[X] + c \cdot 1\\ &= \text{E}[X] + c.\ _\square \end{align}\]

For random variable \(X\) and any constant \(c\)

\[ \text{E}[cX] = c \text{E}[X].\]

By the definition of expectation

\[ \begin{align} \text{E}[cX] &= \sum_x cx P(cX=cx)\\ &= c \sum_x x P(X=x)\\ &= c \text{E}[X].\ _\square \end{align}\]

The first theorem shows that translating every value of a random variable by a constant translates the expected value by the same constant. This makes intuitive sense: if all values shift by \(c\), the central or mean value should shift by \(c\) as well. The second theorem shows that scaling the values of a random variable by a constant \(c\) also scales the expected value by \(c\).

A fair six-sided die is labeled on each face with the numbers 5 through 10. What is the expected value of the die roll?

From before, we know the expected value of a regular six-sided die is \(\text{E}[X]=3.5\). This problem is asking for \(\text{E}[X+4]\).

Therefore, the expected value of the die roll is \(3.5+4=7.5\). \(_\square\)

A fair six-sided die is labeled on each face with the first six positive multiples of 5. What is the expected value of the die roll?

From before, the expected value of a regular six-sided die roll is \(\text{E}[X]=3.5\). This problem is asking for \(\text{E}[5X]\).

Therefore, the expected value of the die roll is \(3.5\times 5= 17.5\). \(_\square\)
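Both theorems can be checked exactly on the two die examples above. The sketch below is a minimal illustration, assuming distributions are stored as dictionaries from outcome to probability; the helper names `expected_value` and `transform` are hypothetical.

```python
from fractions import Fraction

def expected_value(dist):
    """Sum of x * P(X = x) over a finite distribution {x: P(X = x)}."""
    return sum(x * p for x, p in dist.items())

def transform(dist, g):
    """Distribution of g(X); probabilities of outcomes that collide are added."""
    out = {}
    for x, p in dist.items():
        out[g(x)] = out.get(g(x), 0) + p
    return out

die = {x: Fraction(1, 6) for x in range(1, 7)}

shifted = transform(die, lambda x: x + 4)  # faces 5 through 10
scaled = transform(die, lambda x: 5 * x)   # faces 5, 10, ..., 30

print(expected_value(shifted))  # 15/2, i.e. 3.5 + 4
print(expected_value(scaled))   # 35/2, i.e. 5 * 3.5
```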

We now show how to calculate the expected value for a sum of random variables.

Let \(X\) and \(Y\) be random variables. Then

\[ \text{E}[X + Y] = \text{E}[X] + \text{E}[Y].\]

Two fair six-sided dice are rolled. What is the expected value of the sum of their rolls?

Let \(X_1\) and \(X_2\) be the results of the two dice. From before, each has expected value \(3.5\). This problem asks for \(\text{E}[X_1+X_2]=\text{E}[X_1]+\text{E}[X_2]\).

Therefore, the expected value of the sum of the die rolls is \(3.5+3.5=7\). \(_\square\)
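Enumerating all 36 equally likely outcomes gives the same answer without appealing to the sum rule, and shows that it agrees with adding the two single-die expectations. This brute-force check is only a sketch for illustration.

```python
from fractions import Fraction
from itertools import product

faces = range(1, 7)
outcomes = list(product(faces, repeat=2))  # (first die, second die), 36 pairs
p = Fraction(1, len(outcomes))             # each pair is equally likely

e_sum = sum((a + b) * p for a, b in outcomes)
e_first = sum(a * p for a, _ in outcomes)
e_second = sum(b * p for _, b in outcomes)

print(e_sum, e_first + e_second)  # 7 7
```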

Linearity of Expectation

Main Article: Linearity of Expectation.

The above theorems can be combined to prove the following:

For any random variables \(X_1, X_2, \ldots, X_k\) and constants \(c_1, c_2, \ldots, c_k,\) we have

\[ \text{E} \left[ \sum\limits_{i=1}^k c_i X_i \right] = \sum\limits_{i=1}^k c_i \text{E}[X_i] .\]

This is known as linearity of expectation, and it holds even when the random variables \(X_i\) are not independent.

A fair six-sided die is rolled repeatedly until three sixes are rolled consecutively. What is the expected number of rolls?

Let \(X_n\) be the random variable that represents the number of rolls required to get \(n\) consecutive sixes.

In order to get \(n\) consecutive sixes, there must first be \(n-1\) consecutive sixes. Then, with probability \(\frac{1}{6}\), the next roll is the \(n^\text{th}\) consecutive six; with probability \(\frac{5}{6}\), it is not, and the process starts over, so that after these \(X_{n-1}+1\) rolls, a further number of rolls with the same distribution as \(X_n\) is needed:

\[\text{E}[X_n]=\text{E}\left[\dfrac{1}{6}\left(X_{n-1}+1\right)+\dfrac{5}{6}\left(X_{n-1}+1+X_n\right)\right]=\text{E}\left[X_{n-1}+1+\dfrac{5}{6}X_n\right].\]

By linearity of expectation,

\[\text{E}[X_n]=\text{E}[X_{n-1}]+1+\dfrac{5}{6}\text{E}[X_n].\]

Solving for \(\text{E}[X_n]\) yields

\[\text{E}[X_n]=6\text{E}[X_{n-1}]+6.\]

Since zero consecutive sixes require no rolls, \(\text{E}[X_0]=0\), and the recurrence gives \(\text{E}[X_1]=6\). Then \(\text{E}[X_2]=6\times 6+6=42\) and \(\text{E}[X_3]=6\times 42+6=258\).

The expected number of rolls until three consecutive sixes is rolled is \(258\). \(_\square\)
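The recurrence \(\text{E}[X_n]=6\text{E}[X_{n-1}]+6\) is easy to iterate. The sketch below generalizes it, as an assumption beyond the text, to runs of successes in independent trials with success probability \(p\), where the same argument gives \(\text{E}[X_n]=(\text{E}[X_{n-1}]+1)/p\) with \(\text{E}[X_0]=0\). The function name is hypothetical.

```python
from fractions import Fraction

def expected_trials_for_run(n, p):
    """Expected number of trials until n consecutive successes.

    Iterates E[X_k] = (E[X_{k-1}] + 1) / p starting from E[X_0] = 0,
    where p is the success probability of each independent trial.
    """
    e = Fraction(0)
    for _ in range(n):
        e = (e + 1) / p
    return e

six = Fraction(1, 6)  # probability of rolling a six
print([int(expected_trials_for_run(k, six)) for k in (1, 2, 3)])  # [6, 42, 258]
```

With \(p=\frac{1}{6}\), the update \((e+1)/p\) is exactly \(6e+6\), matching the recurrence above.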

A fair coin is tossed repeatedly until 5 consecutive heads occur. What is the expected number of coin tosses?

Continuous Random Variables

Two important types of random variables are discrete and continuous. The die-roll example from above is a discrete random variable, since it takes only finitely many values (more generally, a discrete random variable takes at most countably many values). Choosing a random real number from the interval \([0,1]\) is an example of a continuous random variable.

Given a continuous random variable \(X\) and probability density function \(f(x)\), the expected value of \(X\) is defined by

\[ \text{E}[X] = \int_x xf(x) \, dx. \]

Given the probability density function \(f(x)=3x^2\) defined on the interval \([0,1]\), what is \(\text{E}[X]?\)

By the above definition,

\[\begin{align} \text{E}[X] &=\displaystyle \int_0^1{3x^3}\ dx\\ &=\left. \dfrac{3}{4}x^4\ \right|_0^1\\ &=\dfrac{3}{4}.\ _\square \end{align}\]
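When the integral is harder to evaluate by hand, \(\text{E}[X]=\int x f(x)\,dx\) can be approximated numerically. The sketch below uses a plain midpoint rule with a hypothetical helper name; the number of subintervals is an arbitrary choice, and a library quadrature routine would usually be preferred in practice.

```python
def expected_value_continuous(f, a, b, n=100_000):
    """Approximate the integral of x * f(x) over [a, b] by the midpoint rule.

    Assumes f is a probability density that is zero outside [a, b].
    """
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h  # midpoint of the i-th subinterval
        total += x * f(x)
    return total * h

approx = expected_value_continuous(lambda x: 3 * x**2, 0.0, 1.0)
print(round(approx, 6))  # 0.75
```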

\[f(x)=\frac{\pi}{14400}x\sin\left(\frac{\pi}{120}x\right),\ \ 0<x<120\]

A life insurance actuary models \(X\), a person's lifetime, with the probability density function above.

According to this model, what is \(\big\lfloor \text{E}[X]\big\rfloor?\)

Conditional Expectation

Let \(X\) and \(Y\) be discrete random variables. Then the expected value of \(X\) given the event \(Y=y\), denoted as \(\text{E}\left[X\mid Y=y\right]\), is

\[\text{E}\left[X\mid Y=y\right]=\sum\limits_{x}{xP(X=x \mid Y=y)}.\]

What is the expected number of "heads" flips in 5 flips of a fair coin given that the number of "heads" flips is greater than 2?

In this example, \(X\) is the number of "heads" flips in 5 flips of the coin, and the conditioning event \(Y=y\) is the event \(X>2\).

Recall the formula for conditional probability: \(\displaystyle P(X=x |Y=y)=\frac{P(X=x \cap Y=y)}{P(Y=y)}.\)

Thus the desired sum is

\[\text{E}[X\mid X>2]=3\left(\frac{P(X=3)}{P(X>2)}\right)+4\left(\frac{P(X=4)}{P(X>2)}\right)+5\left(\frac{P(X=5)}{P(X>2)}\right).\]

These probabilities can be calculated using the binomial distribution:

\[\begin{align} P(X=3)&=\dbinom{5}{3}\left(\dfrac{1}{2}\right)^5=\dfrac{5}{16}\\ P(X=4)&=\dbinom{5}{4}\left(\dfrac{1}{2}\right)^5=\dfrac{5}{32}\\ P(X=5)&=\dbinom{5}{5}\left(\dfrac{1}{2}\right)^5=\dfrac{1}{32}\\ \Rightarrow P(X>2)&=P(X=3)+P(X=4)+P(X=5)=\dfrac{1}{2}. \end{align}\]

Substituting these values,

\[\text{E}[X\mid X>2]=3(2)\left(\frac{5}{16}\right)+4(2)\left(\frac{5}{32}\right)+5(2)\left(\frac{1}{32}\right)=\frac{55}{16}.\]

The expected number of heads flips given that there are more than 2 heads flips is \(\dfrac{55}{16}=3.4375.\ _\square\)
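The same conditional expectation can be computed exactly by keeping only the outcomes in the conditioning event and renormalizing their probabilities. This is a short sketch using Python's standard `math.comb` for the binomial coefficients; the variable names are our own.

```python
from fractions import Fraction
from math import comb

n = 5
# Binomial(5, 1/2) probabilities P(X = k) for k = 0..5.
pmf = {k: Fraction(comb(n, k), 2**n) for k in range(n + 1)}

# Condition on the event X > 2: keep those outcomes and renormalize.
event = [k for k in pmf if k > 2]
p_event = sum(pmf[k] for k in event)
cond_mean = sum(k * pmf[k] / p_event for k in event)

print(p_event, cond_mean)  # 1/2 55/16
```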

Let \(X\) be a continuous random variable with density function \(f(x)\). Let \(Y=y\) be an event with \(P(Y=y)>0\) that restricts \(X\) to a set \(\chi\), that is, the event that \(X \in \chi\). Then the expected value of \(X\) given the event \(Y=y\), denoted as \(\text{E}\left[X\mid Y=y\right]\), is

\[\text{E}\left[X\mid Y=y\right]=\frac{{\Large\int}_{x\in \chi}{\ xf(x)\ dx}}{P(Y=y)}.\]

Let \(X\) be a continuous random variable with density function \(f(x)=2x\text{ , }0<x<1\).

What is \(\text{E}\left[X\mid X<\frac{1}{2}\right]?\)

In this example, the condition \(X<\frac{1}{2}\) is applied on the random variable \(X\). Subject to this condition, the range of \(X\) is \(0<x<\frac{1}{2}\).

The probability \(P\left(X<\frac{1}{2}\right)\) can be calculated with the same bounds:

\[\begin{align} P\left(X<\frac{1}{2}\right)&=\int_{0}^{\frac{1}{2}}{\ 2x\ dx} \\ &=x^2{\Large\mid}_{0}^{1/2} \\ &=\frac{1}{4}. \end{align} \]

Then the expected value of \(X\) given \(X<\frac{1}{2}\) is calculated using the formula above.

\[\begin{align} \text{E}\left[X\mid X<\frac{1}{2}\right]&=\frac{{\Large\int}_{0}^{1/2}{\ 2x^2\ dx}}{P\left(X<\frac{1}{2}\right)} \\ &= \frac{\frac{2}{3}x^3 {\Large\mid}_{0}^{1/2}}{\frac{1}{4}} \\ &= \frac{\frac{1}{12}-0}{\frac{1}{4}} \\ &= \frac{1}{3}. \end{align}\]

The expected value of \(X\) given \(X<\dfrac{1}{2}\) is \(\dfrac{1}{3}.\) \(_\square\)
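Both integrals in this example can also be approximated numerically, which is handy when the density is less convenient. The sketch below reuses a plain midpoint rule (a hypothetical helper, with an arbitrary number of subintervals) for the numerator \(\int_0^{1/2} x f(x)\,dx\) and the denominator \(P\left(X<\frac{1}{2}\right)\).

```python
def midpoint_integral(g, a, b, n=100_000):
    """Approximate the integral of g over [a, b] with the midpoint rule."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))

def f(x):
    return 2 * x  # density of X on (0, 1)

p_event = midpoint_integral(f, 0.0, 0.5)                     # P(X < 1/2)
numerator = midpoint_integral(lambda x: x * f(x), 0.0, 0.5)  # integral of x f(x)
print(round(p_event, 6), round(numerator / p_event, 6))      # 0.25 0.333333
```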

Additional Worked Examples

There are 2 bags, and balls numbered 1 through 5 are placed in each bag. From each bag, 1 ball is removed. What is the expected value of the total of the two balls?

Consider the following table, which lists the possible values of the first ball in the first row, and the possible values of the second ball in the first column. Each entry in the table is obtained by finding the sum of these two values:

\[ \begin{array} {| c | c | c | c | c | c | } \hline & 1 & 2 & 3 & 4 & 5 \\ \hline 1 & 2 & 3 & 4 & 5 & 6\\ \hline 2 & 3 & 4 & 5 & 6 & 7 \\ \hline 3 & 4 & 5 & 6 & 7 & 8 \\ \hline 4 & 5 & 6 & 7 & 8 & 9 \\ \hline 5 & 6 & 7 & 8 & 9 & 10 \\ \hline \end{array} \]

Let \(X\) be the random variable denoting the sum of these values. Then, we can see that the probability distribution of \(X\) is given by the following table:

\[\begin{array} {| c | c | c | c | c | c | c | c | c | c |} \hline x & 2 & 3 & 4 & 5 & 6 & 7 & 8 & 9 & 10 \\ \hline P(X=x) &\frac{1}{25} & \frac{2}{25} & \frac{3}{25} & \frac{4}{25} & \frac{5}{25} & \frac{4}{25} & \frac{3}{25} & \frac{2}{25} & \frac{1}{25} \\ \hline \end{array} \]

As such, this allows us to calculate

\[ \begin{align} E[X] & = 2 \times \frac {1}{25} + 3 \times \frac {2}{25} + 4 \times \frac {3}{25} + 5 \times \frac {4}{25} + 6 \times \frac {5}{25} + 7 \times \frac {4}{25} + 8 \times \frac {3}{25} + 9 \times \frac {2}{25} + 10 \times \frac {1}{25} \\&= 6. \ _\square \end{align} \]

Note: How can we use the linearity of expectation to arrive at the result quickly?
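A brute-force enumeration of the 25 equally likely draws confirms the table. It also illustrates the shortcut the note hints at: each bag alone has expected value \(\frac{1+2+3+4+5}{5}=3\), so by linearity the expected total is \(3+3=6\). This is a sketch for checking only.

```python
from fractions import Fraction
from itertools import product

balls = range(1, 6)                     # each bag holds balls 1..5
pairs = list(product(balls, repeat=2))  # 25 equally likely draws
p = Fraction(1, len(pairs))

e_total = sum((a + b) * p for a, b in pairs)
e_single = sum(Fraction(x, 5) for x in balls)  # expectation for one bag

print(e_total, 2 * e_single)  # 6 6
```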

\(n\) six-sided dice are rolled. What is the expected number of times \(5\) is rolled?

To determine the expected number of times 5 is rolled, we can define \(Y\) to be the random variable for the number of times a \(5\) is rolled, and \(Y_i\) to be the random variable for die \(i\) rolling a \(5\). It is easy to see that \(E(Y_i) = \frac{1}{6} \times 1 + \frac{5}{6} \times 0 = \frac{ 1}{6}.\) We have \(Y = \sum\limits_{i=1}^{n} Y_i\), so by the linearity of expectation, \(E(Y) = \sum\limits_{i=1}^{n} E(Y_i)\). Therefore, \(E(Y)= \frac{n}{6}\). \(_\square\)

Note: We can also answer this question by noting that since the probability of getting each number is equal, the expected number of times we get each number is the same, and the sum of these expectations is \(n\), so for each number the expectation is \(\frac{n}{6}\).

Mathematically speaking, let \(Z_i\) be the random variable for the number of times \(i\) is rolled in \(n\) throws. By symmetry, \(E[Z_i]\) is the same for every \(i\). Since every throw produces exactly one result, \(n = Z_1 + Z_2 + Z_3 + Z_4 + Z_5 + Z_6\). Taking expectations and using linearity gives

\[\begin{align} n &= E[ Z_1 + Z_2 + Z_3 + Z_4 + Z_5 + Z_6 ] \\ &= E[Z_1] + E[Z_2]+ E[Z_3]+E[Z_4]+E[Z_5]+E[Z_6] \\ &= 6 E[Z_i], \end{align}\]

so \(E[Z_i] = \frac{n}{6}\).
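For small \(n\), the symmetry argument can be verified by exact enumeration of all \(6^n\) equally likely sequences of rolls. The sketch below (hypothetical function name; exponential cost, so only suitable for tiny \(n\)) computes \(E[Z_i]\) for every face and checks that each equals \(\frac{n}{6}\).

```python
from fractions import Fraction
from itertools import product

def expected_face_counts(n):
    """Exact E[Z_i] for i = 1..6, where Z_i counts how often face i appears in n rolls."""
    outcomes = list(product(range(1, 7), repeat=n))  # 6**n equally likely rolls
    p = Fraction(1, len(outcomes))
    return {face: sum(roll.count(face) * p for roll in outcomes)
            for face in range(1, 7)}

counts = expected_face_counts(3)
print(counts[5], sum(counts.values()))  # 1/2 3
```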

Consider the Bernoulli process of a sequence of independent coin flips for a coin with probability of heads \(p\). Let \(X_i\) be a random variable with \(X_i=1\) if the \(i^\text{th}\) flip is heads and \(X_i=0\) if the \(i^\text{th}\) flip is tails. Let \(Y\) be a random variable indicating the number of trials until the first flip of heads in the sequence of coin flips. What is the expected value of \(Y?\)

The possible values for the number of coin flips until the first head are \(1, 2, 3, \ldots,\) so these are the possible values for random variable \(Y\). For \(i = 1,2, 3, \ldots\), the probability that \(Y=i\) is the probability that the first \(i-1\) trials are tails and trial \(i\) is heads. This gives the distribution \(P(Y = i) = (1-p)^{i-1}p,\) which is a geometrically distributed random variable. Then

\[ \begin{align} E[Y] &= \sum_{i=1}^\infty i (1-p)^{i-1}p\\ &= p \left(1 + 2(1-p) + 3(1-p)^2 + 4 (1-p)^3 + \cdots \right). \end{align}\]

Now for \(\lvert x \rvert < 1\), we have

\[1 + x + x^2 + x^3 + \cdots = \frac{1}{1-x}\]

and differentiating this gives

\[1 + 2x + 3x^2 + \cdots = \frac{1}{(1-x)^2}.\]

Then

\[\begin{align} E[Y] &= p \left(1 + 2(1-p) + 3(1-p)^2 + 4 (1-p)^3 + \cdots \right)\\ &= \frac{p}{\big(1-(1-p)\big)^2} = \frac{p}{p^2} = \frac{1}{p}. \ _\square \end{align}\]
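The closed form \(E[Y]=\frac{1}{p}\) can be sanity-checked by summing the series numerically. The sketch below truncates \(\sum_{i\ge 1} i(1-p)^{i-1}p\) after a fixed number of terms (an arbitrary cutoff, large enough that the tail is negligible for the values of \(p\) shown); the function name is hypothetical.

```python
def geometric_partial_mean(p, terms):
    """Partial sum of i * (1 - p)**(i - 1) * p for i = 1..terms."""
    return sum(i * (1 - p) ** (i - 1) * p for i in range(1, terms + 1))

for p in (0.5, 0.25, 0.1):
    # Truncated series next to the closed form 1/p.
    print(p, round(geometric_partial_mean(p, 1000), 9), 1 / p)
```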

Expected Value of a Dice Throw Experiment

A single throw of a die has one outcome from the set \(\{1, 2, 3, 4, 5, 6\}\). Hence the random variable \(X\) (the outcome of throwing a die) can take \(6\) values:

\[x_1 = 1,\quad x_2 = 2,\quad x_3 = 3,\quad x_4 = 4,\quad x_5 = 5,\quad x_6 = 6.\]

For a fair die, \(P(x_1) = P(x_2) = P(x_3) = P(x_4) = P(x_5) = P(x_6) = \frac{1}{6},\) implying

\[\begin{align} E(X) &= x_1 P(x_1) + x_2 P(x_2) + x_3 P(x_3)+ x_4 P(x_4)+ x_5 P(x_5)+ x_6 P(x_6)\\ &= \frac{1}{6} \times (1+2+3+4+5+6)\\ &= 3.5.\ _\square \end{align}\]

Note: As this example illustrates, the expected value of a random variable \(X\) need not be one of the values taken by \(X\).

Expected Value of the Number of Throws of a Fair Coin Until a Head Appears

Let

\[\begin{align} X &=\text{ number of times to throw a coin till you get a heads}\\\\ \text{Events }&= \{H, TH, TTH, TTTH, TTTTH, \ldots\}. \end{align}\]

Then \(X\) takes the values \(1, 2, 3, 4, 5, \ldots\) with probabilities

\[P(X=1)=\frac{1}{2},\quad P(X=2)=\frac{1}{4},\quad P(X=3)=\frac{1}{8},\quad P(X=4)=\frac{1}{16},\quad P(X=5)=\frac{1}{32},\quad \ldots\]

Hence

\[E(X) = 1 \times \frac{1}{2} + 2 \times \frac{1}{4} + 3 \times \frac{1}{8} + 4\times \frac{1}{16} + 5 \times \frac{1}{32} + \cdots. \qquad (1)\]

Dividing both sides by \(2\) gives

\[\frac{1}{2} E(X) = \frac{1}{4} + 2 \times \frac{1}{8} + 3 \times \frac{1}{16} + 4\times \frac{1}{32} + 5 \times \frac{1}{64} + \cdots. \qquad (2)\]

Taking \((1)-(2)\) and combining the terms with equal denominators, we have

\[\begin{align} \frac{1}{2} E(X) &= \frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \frac{1}{16} + \frac{1}{32} + \frac{1}{64} + \cdots \\ &= \frac{\frac{1}{2}}{1- \frac{1}{2}}\\ &= 1 \\ \\ \Rightarrow E(X)&=2. \ _\square \end{align}\]
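The subtraction trick can also be checked on partial sums. A short induction (not part of the derivation above) gives \(\sum_{k=1}^{m} \frac{k}{2^k} = 2 - \frac{m+2}{2^m}\), which tends to \(2\) as \(m\) grows; the sketch below verifies this identity exactly for a few values of \(m\). The function name is hypothetical.

```python
from fractions import Fraction

def partial_mean(m):
    """Exact value of the sum of k / 2**k for k = 1..m (first m terms of (1))."""
    return sum(Fraction(k, 2**k) for k in range(1, m + 1))

for m in (1, 2, 5, 10, 20):
    closed_form = 2 - Fraction(m + 2, 2**m)
    assert partial_mean(m) == closed_form
    print(m, float(partial_mean(m)))  # approaches 2 as m grows
```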

Problem Solving

The Las Vegas casino Magnicifecto was having difficulties attracting its hotel guests down to the casino floor. The empty casino prompted management to take drastic measures, and they chose to forgo the house cut. They decided to offer an “even value” game: whatever bet size the player places (say \(\$A\)), there is a 50% chance that he will get \(+\$A\) and a 50% chance that he will get \(-\$A\). They felt that since the expected value of every game is 0, they should not be making or losing money in the long run.


Scrooge, who was on vacation, decided to exploit this even value game. He has an infinite bankroll (money) and decides to play the first round (entire series) in the following manner:

- He first makes a bet of \(\$10\).

- If he wins, he keeps his earnings and leaves.

- Each time that he loses, he doubles the size of his previous bet and plays again.

Now, what is the expected value of Scrooge’s (total) winnings from this first round (which ends when he leaves)?

A "round" refers to the entire series of games played above. This question refers to the entire round. There are 4 rounds in the set of problems.

This problem is part of Go Big Or Go Home, which explores the linearity of expected value.

Image credit: Wikipedia Flipchip

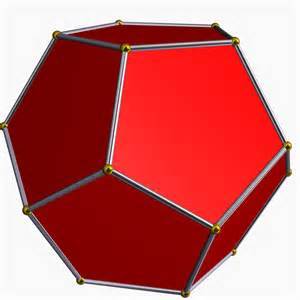

A bug starts on one vertex of a dodecahedron; call it A. Choose a second vertex adjacent to the one he starts on, and call it B.

Every second he randomly walks along one edge to another vertex. What is the expected value of the number of seconds it will take for him to reach the vertex B?


Clarification: Every second he chooses randomly among the three edges available to him, including the one he might have just walked along. On his first move, he has a \(\frac13\) probability of reaching B.


Image credit: math.wikia.com

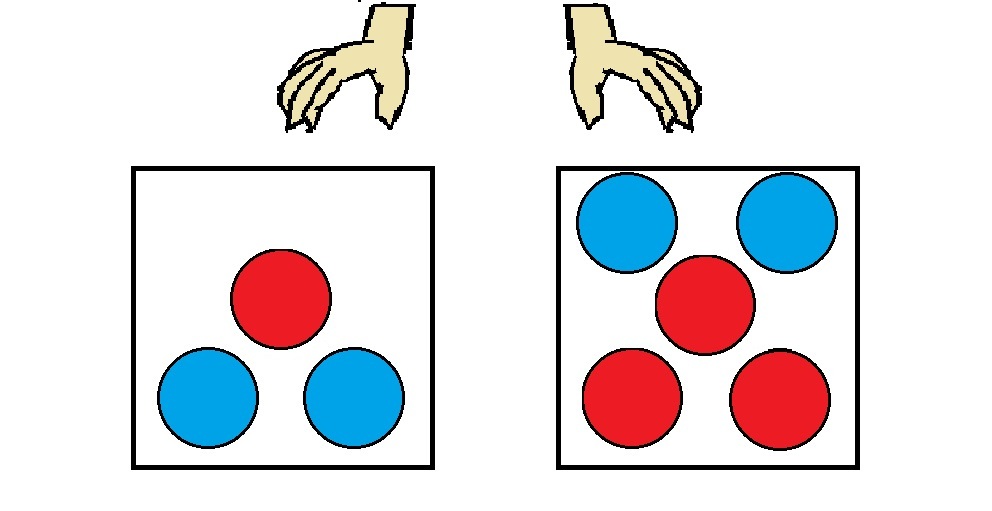

As shown above, the left box contains 1 red ball and 2 blue balls while the right box contains 2 blue balls and 3 red balls.

In a lucky draw game, you have to randomly and simultaneously pick up one ball from each box using both hands.

If you get a blue ball from the left box, you'll be rewarded 4 dollars, but you'll lose 5 dollars for the red draw. On the other hand, a red ball drawn from the right box will cost you 7 dollars while a blue draw will earn you 8 dollars.

What is the expected amount of money earned from this game?

You flip a fair coin several times.

Find the expected value for the number of flips you'll need to make in order to see the pattern TXT, where T is tails, and X is either heads or tails.

If the expected value can be expressed as \( \frac ab\), where \(a\) and \(b\) are coprime positive integers, find \(a+b\).


Clarification: You are looking for the pattern TTT or THT. That is, you will keep flipping until you see one of these two patterns, and then count the number of flips you made.


Image credit: www.cointalk.com

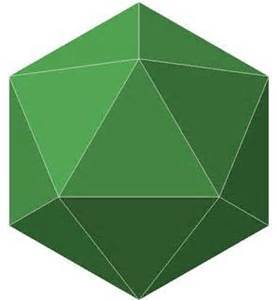

A bug starts on one vertex of an icosahedron. Every second he randomly walks along one edge to another vertex. What is the expected value of the number of seconds it will take for him to reach the vertex opposite to the original vertex he was on?


Clarification: Every second he chooses randomly among the five edges available to him, including the one he might have just walked along.


Image credit: www.kjmaclean.com.

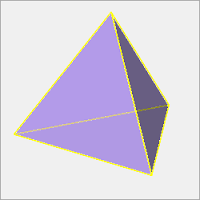

Call the vertices of a tetrahedron A, B, C, and D.

A bug starts on vertex A. Every second he randomly walks along one edge to another vertex. What is the expected value of the number of seconds it will take for him to reach the vertex D?


Clarification: Every second he chooses randomly among the three edges available to him, including the one he might have just walked along.


Image credit: http://loki3.com/