Green's Functions in Physics

Green's functions are a device used to solve difficult ordinary and partial differential equations which may be unsolvable by other methods. The idea is to consider a differential equation such as

\[\frac{d^2 f (x) }{dx^2} + x^2 f(x) = 0 \implies \left(\frac{d^2}{dx^2} + x^2 \right) f(x) = 0 \implies \mathcal{L} f(x) = 0.\]

Above, the notation \(\mathcal{L} = \frac{d^2}{dx^2} + x^2\) is defined so that \(\mathcal{L}\) is a differential operator: a linear combination of derivative operators multiplied by functions. As this example shows, a differential equation can be written as such an operator acting on the unknown function. The Green's function in this case is the analogue of the inverse of \(\mathcal{L}\):

\[G(x,y) \sim \mathcal{L}^{-1} \sim \left(\frac{d^2}{dx^2} + x^2 \right)^{-1}.\]

The idea is that the Green's function inverts the operator, so the inhomogeneous version of the above equation, \(\mathcal{L} f(x) = g(x)\), can be solved by the analogue of \(f(x) = \mathcal{L}^{-1} g(x)\), with \(G(x,y)\) playing the role of \(\mathcal{L}^{-1}\). Schematically, \(\mathcal{L}f \sim \mathcal{L} \mathcal{L}^{-1} g = g\). See below for the formal mathematics underlying this idea and for why \(G(x,y)\) is a function of two variables.
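To make "\(\mathcal{L}^{-1}\)" concrete, here is a finite-dimensional analogue, offered as an illustrative sketch rather than part of the original discussion. It assumes the simpler operator \(\mathcal{L} = d^2/dx^2\) on \([0,1]\) with \(f(0)=f(1)=0\), replaces \(\mathcal{L}\) by the usual second-difference matrix on a uniform grid, and solves for one column of its inverse with a discrete delta function (height \(1/h\) at a single node) on the right-hand side. That column should agree at the grid points with the Green's function of this operator, \(G(x,y) = (y-1)x\) for \(x<y\) and \(y(x-1)\) for \(x>y\). The helper names are hypothetical.

```python
def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm: solve a tridiagonal linear system without pivoting."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def discrete_green_column(n, j):
    """Column j of the inverse second-difference matrix, with a discrete delta 1/h at node j.

    Grid: x_i = i*h for i = 1..n, h = 1/(n+1); the values at x = 0 and x = 1 are fixed at zero.
    """
    h = 1.0 / (n + 1)
    off = [1.0 / h**2] * n
    diag = [-2.0 / h**2] * n
    rhs = [0.0] * n
    rhs[j - 1] = 1.0 / h          # discrete stand-in for delta(x - x_j)
    return solve_tridiagonal(off, diag, off, rhs)


def exact_green(x, y):
    """Green's function of d^2/dx^2 on [0, 1] with zero boundary values."""
    return (y - 1.0) * x if x < y else y * (x - 1.0)


n, j = 9, 3
h = 1.0 / (n + 1)
column = discrete_green_column(n, j)
for i, g in enumerate(column, start=1):
    print(f"x = {i * h:.1f}: discrete {g:+.5f}, exact {exact_green(i * h, j * h):+.5f}")
```

For this particular operator the agreement at the nodes is exact up to rounding, because the exact Green's function is piecewise linear and the second difference of a piecewise linear function at its kink equals the jump in slope divided by \(h\).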

The inverse of an operator built from derivatives and multiplication by functions is not, in general, a well-defined object; rigorous mathematics is required to derive and justify a more precise construction. As a result, constructing Green's functions is in general a delicate and difficult procedure.

Green's functions are widely used in electrodynamics and quantum field theory, where the relevant differential equations are often difficult or impossible to solve exactly but can be solved perturbatively using Green's functions. In field theory contexts the Green's function is often called the propagator or two-point correlation function, since it is related to the probability of measuring a field at one point given that it is sourced at a different point.


Definition of the Green's Function

Formally, a Green's function is the inverse of an arbitrary linear differential operator \(\mathcal{L}\). It is a function of two variables \(G(x,y)\) which satisfies the equation

\[\mathcal{L} G(x,y) = \delta (x-y)\]

with \(\delta (x-y)\) the Dirac delta function. This says that the Green's function is the solution to the differential equation with a point source as the forcing term. Informally, the solution for an arbitrary forcing term can then be built up point by point by integrating the Green's function against the forcing term. This amounts to a continuous superposition of point-source solutions, each weighted by the value of the forcing term at its source point; the superposition solves the full equation precisely because the differential operator is linear. Formally, this means the solution to a linear differential equation with forcing term, \(\mathcal{L} u(x) = f(x)\), is given by

\[u(x) = \int G(x,y) f(y)\, dy,\]

since then

\[\mathcal{L} u(x) = \int \mathcal{L} G(x,y) f(y)\, dy = \int \delta(x-y) f(y)\, dy = f(x).\]

In general, Green's functions are not true functions but distributions: they are defined by what they give when integrated against ordinary functions. Although the resulting integrals may be difficult or impossible to evaluate in closed form, they give an explicit solution to linear differential equations for which other methods may fail, and they can at the very least be evaluated numerically.
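As a numerical illustration of \(u(x) = \int G(x,y) f(y)\, dy\), the Python sketch below uses an assumed example that is not in the text: \(\mathcal{L} = d^2/dx^2\) on \([0,1]\) with \(u(0)=u(1)=0\), whose Green's function is \(G(x,y) = (y-1)x\) for \(x<y\) and \(y(x-1)\) for \(x>y\). The integral is approximated with the trapezoidal rule (our choice), and the results can be compared with the exact solutions \(u = x(x-1)/2\) for \(f = 1\) and \(u = -\sin(\pi x)/\pi^2\) for \(f = \sin \pi x\). The function names are hypothetical.

```python
import math


def green(x, y):
    """Green's function of d^2/dx^2 on [0, 1] with u(0) = u(1) = 0 (assumed example)."""
    return (y - 1.0) * x if x < y else y * (x - 1.0)


def superpose(f, x, n=2000):
    """Approximate u(x) = integral_0^1 G(x, y) f(y) dy with the trapezoidal rule."""
    h = 1.0 / n
    total = 0.5 * (green(x, 0.0) * f(0.0) + green(x, 1.0) * f(1.0))
    for i in range(1, n):
        y = i * h
        total += green(x, y) * f(y)
    return total * h


for x in (0.25, 0.5, 0.8):
    u_const = superpose(lambda y: 1.0, x)                    # exact: x(x - 1)/2
    u_sine = superpose(lambda y: math.sin(math.pi * y), x)   # exact: -sin(pi x)/pi^2
    print(x, u_const, x * (x - 1) / 2, u_sine, -math.sin(math.pi * x) / math.pi**2)
```

Nothing in `superpose` depends on the particular forcing term: each value \(f(y)\) simply weights the point-source response \(G(x,y)\), which is the superposition described above.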

Below, several methods for constructing Green's functions are outlined. Which method is optimal is highly context-dependent.

Method of Direct Integration

As given above, the solution to an arbitrary linear differential equation can be written in terms of the Green's function via

\[u(x) = \int G(x,y) f(y)\, dy.\]

Since the Green's function solves

\[\mathcal{L} G(x,y) = \delta(x-y)\]

and the delta function vanishes everywhere except at \(x=y\), one method of constructing Green's functions is to solve the homogeneous equation \(\mathcal{L} G(x,y) = 0\) separately for \(x<y\) and \(x>y\), and then impose matching conditions at \(x=y\) that account for the delta function.

This process can be written more formally as follows:

Below, the discussion is restricted to the special case of monic (leading coefficient unity) second-order linear differential operators for simplicity. First, write down the general form of the solutions on either side of \(x=y\):

\[G(x,y) = \begin{cases} c_1 G_1 (x) + c_2 G_2 (x) \quad & x<y \\ d_1 G_1 (x) + d_2 G_2 (x) \quad &x>y, \end{cases}\]

where \(c_1, c_2, d_1, d_2\) are constants (which may depend on \(y\)) and \(G_1, G_2\) are two linearly independent solutions of the homogeneous equation.

Second, impose the two boundary conditions. This fixes two of the constants \(c_1, c_2, d_1, d_2\) in terms of the other two.

Third, impose continuity of \(G(x,y)\) at \(x=y\). This fixes one of the two remaining constants.

Lastly, require that \(\frac{dG}{dx}\) jump by one across \(x=y\). This follows from integrating the original differential equation over a small window on either side of \(y\):

\[\Delta \left. \frac{dG}{dx} \right|_{x=y} = \int_{y-\epsilon}^{y+\epsilon} \frac {d^2 G}{dx^2} dx = \int_{y-\epsilon}^{y+\epsilon} \delta(x-y)\, dx =1.\]

Only the \(\frac{d^2}{dx^2}\) term of \(\mathcal{L}\) contributes to this integral as \(\epsilon \to 0\). Since \(G\) is continuous at \(y\) and \(\frac{dG}{dx}\) has at most a finite jump there, the lower-order terms of the operator are bounded near \(y\), and their integrals over a window of width \(2\epsilon\) vanish in the limit.

This condition on the change in the derivative fixes the last constant and therefore solves for the Green's function.
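For the common case of zero (Dirichlet) boundary values at both ends of an interval \([a,b]\), the four steps can be carried out once and for all. If \(G_a\) is a homogeneous solution vanishing at \(a\) and \(G_b\) one vanishing at \(b\), the boundary conditions give \(G = A(y)\,G_a(x)\) for \(x<y\) and \(G = B(y)\,G_b(x)\) for \(x>y\); continuity and the unit jump then force \(A = G_b(y)/W(y)\) and \(B = G_a(y)/W(y)\), with Wronskian \(W = G_a G_b' - G_a' G_b\). The Python sketch below is an illustration under those assumptions (a monic second-order operator, Dirichlet conditions, \(W \neq 0\)); `dirichlet_green` is a hypothetical name. It is applied to the cavity operator \(d^2/dx^2 - k^2\) on \([0,\ell]\) from the example that follows, with \(G_a = \sinh kx\) and \(G_b = \sinh k(x-\ell)\).

```python
import math


def dirichlet_green(ua, dua, ub, dub):
    """Build G(x, y) for a monic second-order operator with zero boundary values.

    ua solves the homogeneous equation and vanishes at the left end a;
    ub solves it and vanishes at the right end b; dua, dub are their derivatives.
    """
    def G(x, y):
        w = ua(y) * dub(y) - dua(y) * ub(y)   # Wronskian, assumed nonzero
        if x < y:
            return ua(x) * ub(y) / w          # vanishes at x = a
        return ua(y) * ub(x) / w              # vanishes at x = b
    return G


# Cavity operator d^2/dx^2 - k^2 on [0, ell]; the numbers are chosen for illustration.
k, ell = 0.6, 1.0
G = dirichlet_green(
    lambda x: math.sinh(k * x), lambda x: k * math.cosh(k * x),
    lambda x: math.sinh(k * (x - ell)), lambda x: k * math.cosh(k * (x - ell)),
)

# Check the matching conditions at the source point y with one-sided differences.
y, eps = 0.37, 1e-6
jump = (G(y + 2 * eps, y) - G(y + eps, y)) / eps - (G(y - eps, y) - G(y - 2 * eps, y)) / eps
print("continuity:", G(y - 1e-12, y), G(y + 1e-12, y))
print("jump in dG/dx:", jump)
```

Here \(W = k\sinh k\ell\) does not depend on \(y\), and the piece for \(x<y\) reduces to \(\sinh kx\,\sinh k(y-\ell)/(k\sinh k\ell)\).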

Consider the \(E\)-component of an electromagnetic wave in a lasing cavity of length \(\ell.\) The waves are generated by a current \(J(x)\) that permeates the cavity, and the walls are made of a perfectly reflective, conducting material, so \(E_z(0)=E_z(\ell)=0.\) Maxwell's equation for the \(E\)-component (polarized in the \(z\) direction) is \[\left(\frac{\partial^2}{\partial x^2} - k^2\right)E_z(x) = J(x)\]

where the constant \(k^2\) is equal to \(g\omega^2/c^2\). Here \(c\) is the speed of light, \(\omega\) is the angular frequency of the light, and \(g\) is the gain coefficient, a number that describes the transfer of energy from the medium to the electromagnetic wave.

Find the general solution for \(E_z\) between the walls.

The big idea of Green's approach is that the entire current \(J(x)\) is contributing to the solution for the field and that we can split it up into little packets of current, represented by delta functions at all points \(y\) between the walls. If we can find the contribution to the field at \(x\) from one of these little packets at \(y,\) \(G(x,y)\), then we can just add them up to get the overall field: \(E_z(x) = \int dy\, G(x,y)J(y).\)

So, we start with

\[\dfrac{\partial^2}{\partial x^2}G(x,y) - k^2G(x,y) = \delta(x-y)\]

The \(\delta\)-function at \(y\) is a nasty bump that's going to cause the solution to have a discontinuous derivative as mentioned above. Just to see for ourselves, we can integrate this equation across \(y.\)

\[\begin{align} \int_{y-\varepsilon}^{y+\varepsilon} dx\, \dfrac{\partial^2G}{\partial x^2} &= \int_{y-\varepsilon}^{y+\varepsilon} dx\, \delta(x-y) + \int_{y-\varepsilon}^{y+\varepsilon} dx\, k^2 G \\ \dfrac{\partial G}{\partial x}\bigg|_{x=y+\varepsilon} - \dfrac{\partial G}{\partial x}\bigg|_{x=y-\varepsilon} &= 1 + k^2 \int_{y-\varepsilon}^{y+\varepsilon} dx\, G \end{align}\]

In the limit where \(\varepsilon\) goes to \(0,\) the integral on the right vanishes: \(G\) is continuous (it is not itself a \(\delta\)-function), and the integral of a continuous function over a vanishing range is zero. We are left with the promised unit jump in the derivative at \(x=y:\)

\[\frac{\partial G}{\partial x}\bigg|_{x=y+\varepsilon} = 1 + \frac{\partial G}{\partial x}\bigg|_{x=y-\varepsilon}\]

Because of the jump discontinuity in the derivative of \(G,\) we expect distinct solutions on either side of \(x = y,\) where the \(\delta\)-function is \(0.\) The general solution of \(\partial_x^2G(x,y) - k^2G(x,y) = 0\) is \[A(y)e^{kx} + B(y)e^{-kx}.\] In particular, we'll write the left-hand (valid when \(x\) is to the left of \(y:\) \(x < y\)) and right-hand (valid when \(x\) is to the right of \(y:\) \(y<x\)) solutions like \[\begin{align} G_L(x,y) &= L_1(y)e^{kx} + L_2(y)e^{-kx} \\ G_R(x,y) &= R_1(y)e^{kx} + R_2(y)e^{-kx} \end{align}\]

Because the walls are reflective conductors, the field has to be zero at them: \(G(0,y) = G(\ell,y) = 0.\) This leads us to \(L_1(y) = -L_2(y)\) and \(R_2(y) = -e^{2k\ell}R_1(y).\)

Substituting these relations gives

\[\begin{align} G_L(x,y) &= L^\prime_1(y)\sinh{kx} \\ G_R(x,y) &= R_1(y)\left(e^{kx} - e^{2k\ell}e^{-kx}\right) \\ &= R_1^\prime(y)\sinh{k(x-\ell)} \end{align}\]

where we have absorbed a factor of \(2e^{k\ell}\) into \(R_1(y)\) and a factor of \(2\) into \(L_1(y).\) This still leaves two unknown coefficients, \(L^\prime_1(y)\) and \(R^\prime_1(y).\) To find those, we have two more constraints that we can exploit:

- The solution has to be continuous (single-valued) at \(x=y,\) so \(G_L(y,y) = G_R(y,y).\)

- The derivative jumps by \(1\) at \(x=y,\) as we showed above.

In terms of the solutions, these constraints are:

\[\begin{align} L^\prime_1(y)\sinh{ky} &= R_1^\prime(y)\sinh{k(y-\ell)} \\ kR_1^\prime(y)\cosh{k(y-\ell)} &= 1 + kL^\prime_1(y)\cosh{ky}. \end{align}\]

Solving the two equations leads to

\[\begin{align} L^\prime_1(y) &= \frac{1}{k}\dfrac{\sinh{k(y-\ell)}}{\sinh{k\ell}} \\ R^\prime_1(y) &= \frac{1}{k}\dfrac{\sinh{ky}}{\sinh{k\ell}}, \end{align}\]

so that

\[\begin{align} G_L(x,y) &= \frac{1}{k}\dfrac{\sinh{k(y-\ell)}}{\sinh{k\ell}}\sinh{kx} \\ G_R(x,y) &= \frac{1}{k}\dfrac{\sinh{ky}}{\sinh{k\ell}}\sinh{k(x-\ell)} \end{align}\]

Now, all we have to do is integrate the Green's function against the current function \(J:\) \[\begin{align} E_z(x) &= \int\limits_0^{\ell} dy\, G(x,y)J(y) \\ &= \int\limits_0^{x} dy\, G_R(x,y)J(y) + \int\limits_x^{\ell} dy\, G_L(x,y)J(y) \\ &= \int\limits_0^{x} dy\, \frac{1}{k}\dfrac{\sinh{ky}}{\sinh{k\ell}}\sinh{k(x-\ell)}J(y) + \int\limits_x^{\ell} dy\, \frac{1}{k}\dfrac{\sinh{k(y-\ell)}}{\sinh{k\ell}}\sinh{kx}J(y) \end{align}\]
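The integral above is straightforward to evaluate numerically. The Python sketch below is illustrative: it assumes \(\ell = 1\) and \(k = 0.6\), uses a composite Simpson rule (our choice of quadrature), and splits the integral at \(y=x\) exactly as in the last line, so each piece has a smooth integrand. For a constant current \(J = 1\) the boundary-value problem can also be solved by hand, \(E_z = \big[\cosh kx - 1 + (1-\cosh k\ell)\sinh kx/\sinh k\ell\big]/k^2\), which provides a check. The function names are hypothetical.

```python
import math


def cavity_green(x, y, k, ell):
    """G_L(x, y) for x < y and G_R(x, y) for x >= y, as derived above."""
    denom = k * math.sinh(k * ell)
    if x < y:
        return math.sinh(k * (y - ell)) * math.sinh(k * x) / denom
    return math.sinh(k * y) * math.sinh(k * (x - ell)) / denom


def simpson(func, a, b, n=200):
    """Composite Simpson rule on [a, b] with an even number n of panels."""
    if b <= a:
        return 0.0
    h = (b - a) / n
    total = func(a) + func(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * func(a + i * h)
    return total * h / 3.0


def field(x, J, k, ell, n=200):
    """E_z(x), splitting the y-integral at the kink y = x as in the text."""
    def integrand(y):
        return cavity_green(x, y, k, ell) * J(y)
    return simpson(integrand, 0.0, x, n) + simpson(integrand, x, ell, n)


def exact_constant_current(x, k, ell):
    """Hand solution of E'' - k^2 E = 1 with E(0) = E(ell) = 0."""
    return (math.cosh(k * x) - 1 + (1 - math.cosh(k * ell)) * math.sinh(k * x) / math.sinh(k * ell)) / k**2


k, ell = 0.6, 1.0
for x in (0.2, 0.5, 0.9):
    print(x, field(x, lambda y: 1.0, k, ell), exact_constant_current(x, k, ell))
```

Other currents can be passed in the same way, for example `field(0.5, lambda y: (ell - y) * math.exp(-y**2 / ell**2), k, ell)` for the second trial current in the text.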

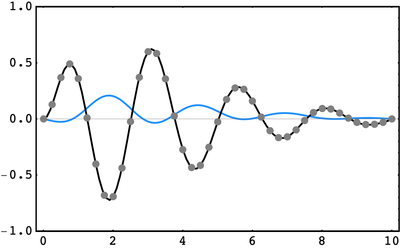

We can test this out on a few trial currents. In the plots below, the \(\color{gray}\text{gray}\) curve represents the current \(J(x),\) the \(\color{blue}\text{blue}\) curve represents the resulting field, and the \(\color{black}\text{black}\) points represent the expression \(\left(\frac{\partial^2}{\partial x^2} - k^2\right)E_z(x),\) which we expect to be equal to \(J(x).\)

In the first example, \(J(x) = xe^{-5x/\ell}\sin\frac{25 x}{\ell}\cos\frac{x}{\ell}\) and \(k=0.02:\)

In the second example, we use \(J(x) = \left(\ell - x\right)e^{-x^2/\ell^2}\) and \(k=0.6:\)

Consider electromagnetic waves polarized in the \(z\) direction and propagating in one dimension in an active laser medium with current density \(J(x)\). Maxwell's equation for the \(z\)-component of the electric field of these waves then reads

\[ \left(\dfrac{d^2}{dx^2} - \kappa^2 \right) E_z (x) = J(x)\]

for \(\kappa\) some constant. Suppose there is a source at \(x= 0\) and that there is a conducting mirror far away so that effectively \(E_z (0) = 1\) and \(E_z (\infty) = 0\) are the boundary conditions. Find the Green's function \(G(x,y)\) for \(x<y\) that will allow for determination of the electric field anywhere in space.

Method of Eigenvector Expansion

If one knows the eigenvalues and normalized eigenfunctions of a differential operator, the Green's function may be computed directly via the formula

\[G(x,y) = \sum_n \frac{1}{\lambda_n} u_n (x) u_n^{\ast} (y),\]

where the \(\lambda_n\) are the eigenvalues corresponding to the normalized eigenfunctions \(u_n\), which satisfy the boundary conditions of the problem, and the star denotes complex conjugation. The formula requires the \(u_n\) to form a complete orthonormal set and every \(\lambda_n\) to be nonzero. Completeness and orthonormality hold for a special class of second-order operators called Sturm-Liouville operators, \(\mathcal{L} = \frac{d}{dx}\left(p(x)\frac{d}{dx}\right) + q(x)\) with \(p\) positive and continuously differentiable and \(q\) continuous, together with suitable boundary conditions (the idea can be extended to operators of higher than second order, but these are less often physically motivated).

The motivation for this definition comes from thinking about solutions to the differential equation as being expanded in a basis of the eigenfunctions of the differential operator. It is straightforward to check that the above definition satisfies the criteria for a Green's function: consider the differential equation \(\mathcal{L} u(x) = f(x)\), then

\[ \begin{align} \mathcal{L} u(x) &= \int \mathcal{L} G(x,y) f(y)\, dy \\ &= \int \mathcal{L} \sum_n \frac{1}{\lambda_n} u_n (x) u_n^{\ast} (y) f(y)\, dy \\ &= \sum_n \frac{1}{\lambda_n} \mathcal{L} u_n (x) \int u_n^{\ast} (y) f(y)\, dy \\ &=\sum_n \frac{1}{\lambda_n} \lambda_n u_n (x) \int u_n^{\ast} (y) f(y)\, dy. \end{align} \]

Above, \(\mathcal{L} u_n (x)\) is replaced by \(\lambda_n u_n(x)\) since the \(u_n\) are the eigenfunctions of \(\mathcal{L}\). But now

\[ \mathcal{L} u(x) =\sum_n u_n (x) \int u_n^{\ast} (y) f(y)\, dy = \sum_n u_n (x) \langle u_n | f \rangle = f(x). \]

In the second equality we have emphasized that the integral is just the usual inner product of functions, so that the right-hand side above is really just \(f(x)\) expanded in a basis of eigenfunctions, so \(\mathcal{L} u(x) = f(x)\) as expected. Therefore, this expression for the Green's function solves the given differential equation.
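As a concrete check of the formula, consider an example that is not in the text: \(\mathcal{L} = d^2/dx^2\) on \([0,\pi]\) with zero boundary values, whose normalized eigenfunctions are \(u_n = \sqrt{2/\pi}\,\sin nx\) with eigenvalues \(\lambda_n = -n^2\) (none of them zero). Matching a continuous solution with a unit jump in slope at \(x=y\) gives the same Green's function in closed form, \(G = x(y-\pi)/\pi\) for \(x<y\) and \(y(x-\pi)/\pi\) for \(x>y\). The Python sketch below truncates the eigenfunction sum at a finite number of terms \(N\) (an arbitrary choice); since the terms fall off like \(1/n^2\), the truncation error is at most about \((2/\pi)/N\).

```python
import math


def green_eigen(x, y, n_terms=20000):
    """Truncated eigenfunction sum for d^2/dx^2 on [0, pi] with zero boundary values.

    u_n(x) = sqrt(2/pi) sin(n x) and lambda_n = -n^2, so each term is u_n(x) u_n(y) / lambda_n.
    """
    total = 0.0
    for n in range(1, n_terms + 1):
        total += (2.0 / math.pi) * math.sin(n * x) * math.sin(n * y) / (-(n * n))
    return total


def green_direct(x, y):
    """The same Green's function from continuity and the unit jump at x = y."""
    return x * (y - math.pi) / math.pi if x < y else y * (x - math.pi) / math.pi


for x, y in ((0.5, 2.0), (2.5, 1.0), (1.2, 1.2)):
    print(x, y, green_eigen(x, y), green_direct(x, y))
```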

In quantum mechanics, the equation of motion for the wavefunction of the quantum harmonic oscillator is the boundary-value eigenproblem in one dimension:

\[\left(\frac{d^2}{dx^2} - x^2 \right) \psi (x) = -E\psi (x)\]

with boundary conditions \(\psi (\pm \infty) = 0\) and \(E\) the energy of the particle in some system of appropriate units so that all relevant coefficients are unity. The allowed values of the energy are \(E =2n+1\) for \(n \in \{0,1,2,\ldots\}\); the corresponding orthonormal eigenvectors are

\[\psi_n (x) = \frac{1}{\sqrt{2^n n!}} \pi^{-1/4} e^{-x^2/2} H_n(x),\]

with \(H_n (x)\) the Hermite polynomials given by

\[H_n (x) = (-1)^n e^{x^2} \frac{d^n}{dx^n} \left(e^{-x^2}\right).\]

Find the exact solution to the quantum harmonic oscillator with the forcing term \((2x^2+4x+8)e^{-x^2/2}\) and boundary conditions \(\psi (\pm \infty) = 0\), that is, solve

\[\left(\frac{d^2}{dx^2} - x^2 \right) \psi (x) = (2x^2+4x+8)e^{-x^2/2}\]

for \(\psi (x)\) subject to these boundary conditions.

Write down the forcing term as a sum of eigenfunctions of \(\left(\frac{d^2}{dx^2} - x^2 \right)\). This requires the lowest three eigenfunctions:

\[ \begin{align} \psi_0 (x) &= \pi^{-1/4} e^{-x^2/2} \\ \psi_1 (x) &= \left(\frac{\pi}4\right)^{-1/4} x\,e^{-x^2/2}\\ \psi_2 (x) &= (4\pi)^{-1/4} \big(2x^2-1\big) e^{-x^2/2}. \end{align} \]

Therefore the forcing term can be written as

\[\big(2x^2+4x+8\big)e^{-x^2/2} = (4\pi)^{1/4} \psi_2 (x) + 4 \left(\frac{\pi}4\right)^{1/4} \psi_1 (x) + 9 \pi^{1/4} \psi_0 (x).\]

Now consider the decomposition of the Green's function for the quantum harmonic oscillator as a sum over eigenvectors:

\[ \begin{align} G(x,y) &= \sum_n \frac{-1}{2n+1} \psi_n (x) \psi_n^{\ast} (y) \\ &= -\psi_0 (x) \psi_0 (y) - \frac{1}{3} \psi_1 (x) \psi_1 (y) - \frac{1}{5} \psi_2 (x) \psi_2 (y) + \cdots. \end{align} \]

Further terms are omitted because they do not contribute: the eigenfunctions of the differential operator are orthonormal, and the forcing term involves only \(\psi_0, \psi_1, \psi_2\). Writing down the general solution in terms of the Green's function, one finds

\[ \begin{align} \psi(x) &= \int G(x,y) f(y)\, dy \\ &= -\psi_0 (x) \int \psi_0 (y) \left(9 \pi^{1/4} \psi_0 (y)\right) dy\\ &\quad -\frac13 \psi_1 (x) \int \psi_1(y) \left(4 \Big(\frac{\pi}4\Big)^{1/4} \psi_1 (y) \right)dy \\ &\quad - \frac15 \psi_2 (x) \int \psi_2 (y) \left((4\pi)^{1/4} \psi_2 (y)\right)dy. \end{align} \]

The orthogonality of the eigenfunctions ensures that all other integrals in the expansion vanish. The normalization of the eigenfunctions gives the final result:

\[ \begin{align} \psi(x) &=-9 \pi^{1/4} \psi_0 (x) - \frac43 \left(\frac{\pi}4\right)^{1/4} \psi_1 (x) - \frac{1}{5} (4\pi)^{1/4} \psi_2 (x) \\ &= \left(-9 - \frac43 x - \frac{1}{5} \big(2x^2-1\big)\right)e^{-x^2/2}. \end{align} \]

The solution satisfies the given boundary conditions, as it must do because all of the eigenfunctions satisfy the boundary conditions as well.
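The expansion can also be carried out numerically. The Python sketch below is an illustration under stated assumptions: it builds the normalized eigenfunctions \(\psi_n\) from the formula given earlier, generating \(H_n\) with the standard recurrence \(H_{n+1} = 2xH_n - 2nH_{n-1}\), approximates each inner product \(\langle \psi_n | f \rangle\) with the trapezoidal rule on \([-12, 12]\) (the interval, step, and cutoff \(n \le 6\) are our choices), and compares the sum \(\sum_n \langle \psi_n | f \rangle\, \psi_n(x)/\lambda_n\), with \(\lambda_n = -(2n+1)\), against the closed-form answer. The coefficients for \(n \geq 3\) come out zero to rounding, as orthogonality predicts.

```python
import math


def hermite_function(n, x):
    """psi_n(x) = (2^n n!)^(-1/2) pi^(-1/4) exp(-x^2/2) H_n(x), normalized on the real line."""
    h_prev, h_curr = 1.0, 2.0 * x            # H_0(x), H_1(x)
    if n == 0:
        h_curr = 1.0
    for m in range(1, n):
        # Physicists' Hermite recurrence: H_{m+1} = 2x H_m - 2m H_{m-1}
        h_prev, h_curr = h_curr, 2.0 * x * h_curr - 2.0 * m * h_prev
    norm = math.pi ** -0.25 / math.sqrt(2.0 ** n * math.factorial(n))
    return norm * math.exp(-x * x / 2.0) * h_curr


def inner(f, g, a=-12.0, b=12.0, n=4000):
    """Trapezoidal approximation of <f|g> for real functions decaying outside [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) * g(a) + f(b) * g(b))
    for i in range(1, n):
        x = a + i * h
        total += f(x) * g(x)
    return total * h


def forcing(x):
    return (2 * x * x + 4 * x + 8) * math.exp(-x * x / 2)


def closed_form(x):
    return (-9 - 4 * x / 3 - (2 * x * x - 1) / 5) * math.exp(-x * x / 2)


N_MAX = 6  # assumed cutoff; only n <= 2 should contribute
coeffs = [inner(lambda y, n=n: hermite_function(n, y), forcing) for n in range(N_MAX + 1)]


def solve_by_eigen_expansion(x):
    """psi(x) = sum_n <psi_n|f> psi_n(x) / lambda_n with lambda_n = -(2n + 1)."""
    return sum(c / (-(2 * n + 1)) * hermite_function(n, x) for n, c in enumerate(coeffs))


print([round(c, 10) for c in coeffs])
for x in (-1.0, 0.0, 0.7):
    print(x, solve_by_eigen_expansion(x), closed_form(x))
```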

What is the Green's function \(G(x,y)\) for the time-dependent free-particle Schrödinger equation in one dimension?

The time-dependent free-particle Schrödinger equation in one dimension is

\[-\frac{\hbar^2}{2m} \frac{\partial^2 \psi}{\partial x^2} = i\hbar \frac{\partial \psi}{\partial t}.\]

Note: Recall that a solution to the time-dependent Schrödinger equation can be written out in a basis of solutions to the time-independent Schrödinger equation

\[\psi(x,t) = \sum_n c_n \phi_n (x) e^{-iE_n t /\hbar},\]

where \(E_n\) is the energy of the time-independent eigenfunction \(\phi_n (x)\).


Method of Fourier Transform

A final common method of computing Green's functions is to Fourier transform the defining equation and then invert the transform by contour integration, using Cauchy's residue theorem.

Cauchy's Residue Theorem

For a function \(f(z)\) that is analytic on and inside a closed contour \(\gamma\) in the complex plane, except at isolated poles, the integral of \(f\) counterclockwise around \(\gamma\) is given by

\[\oint_{\gamma} f(z) \,dz = 2\pi i \sum_{a_i \in \gamma} \text{Res}_{z = a_i} f(z),\]

where the sum is taken over all poles \(a_i\) enclosed by \(\gamma\); a clockwise contour gives an extra minus sign. For a simple pole at \(a_i\), the residue is \(\text{Res}_{z = a_i} f(z) = \lim_{z \to a_i} (z - a_i) f(z)\), that is, the value at \(a_i\) of the function with the factor \(1/(z-a_i)\) removed.

Typically, the method works by first Fourier transforming the Green's function and applying the differential operator to the Fourier transform. The Fourier transform of the Green's function will usually contain simple poles. The inverse Fourier transform can then be computed via contour integration to obtain the Green's function in position space.

Find the Green's function for the one-dimensional, time-independent Schrödinger equation

\[\left(\frac{d^2}{dx^2} + k^2 \right) \psi (x) = \frac{2m}{\hbar^2} V(x) \psi(x),\]

with \(k^2 = \frac{2mE}{\hbar^2}\). Use it to construct the general solution to the Schrödinger equation for an arbitrary potential.

The Green's function satisfies

\[\left(\frac{d^2}{dx^2} + k^2 \right)G(x) = \delta (x),\]

where coordinates have been shifted so that \(y=0\) in the standard definition above. Take \(G(x)\) equal to the inverse Fourier transform of its Fourier transform \(g(s)\):

\[G(x) = \frac{1}{\sqrt{2\pi}} \int e^{isx} g(s)\, ds.\]

Recall also the integral identity for the Dirac delta function:

\[\delta (x) = \frac{1}{2\pi} \int e^{isx} ds.\]

Plugging into the equation for the Green's function, one finds

\[\frac{1}{\sqrt{2\pi}} \int \big(-s^2 + k^2\big) e^{isx} g(s)\, ds= \frac{1}{2\pi} \int e^{isx} ds,\]

so the Fourier transform of the Green's function can be read off:

\[g(s) = \frac{1}{\sqrt{2\pi}} \frac{1}{k^2 - s^2}.\]

Now the Green's function is defined by the inverse Fourier transform:

\[G(x) = \frac{1}{2\pi} \int \frac{e^{isx}}{(k+s)(k-s)} ds.\]

This integral can be performed by contour integration in the complex \(s\) plane. The integrand has two simple poles, at \(s = \pm k\). The real integration path is closed by a semicircle in the upper or lower half-plane whose radius is taken to infinity. The half-plane is chosen according to the sign of \(x\) so that the factor \(e^{isx}\) decays exponentially on the arc: the upper half-plane for \(x>0\) and the lower half-plane for \(x<0\). The contribution from the arc then vanishes as the radius goes to infinity, so the closed contour integral equals the original real integral defining the Green's function. Because both poles lie on the real axis, the way the contour circumvents them matters; this choice is discussed later. With the choice made here, each contour encloses exactly one pole, and which pole is enclosed depends on the half-plane in which the semicircle is closed.

Choice of semicircular integration contours for \(x>0\) (left) and \(x<0\) (right)

For \(x>0\), the enclosed pole is at \(s=k\) and Cauchy's residue theorem yields

\[G(x) = -\frac{1}{2\pi} \oint \frac{e^{isx}}{k+s} \frac{1}{s-k} ds = -2\pi i \frac{1}{2\pi} \frac{e^{ikx}}{2k} = -i \frac{e^{ikx}}{2k} .\]
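Cauchy's theorem can be checked numerically on this integrand. The Python sketch below is illustrative (the values \(k = 1.3\), \(x = 0.8\), and the circle radius \(0.5\) are arbitrary choices): it integrates \(e^{isx}/\big((k+s)(k-s)\big)\) counterclockwise around a small circle enclosing only the pole at \(s = k\), using the trapezoidal rule in the angle, which converges very rapidly for smooth periodic integrands. After dividing by \(2\pi\), the result should match \(-i e^{ikx}/(2k)\).

```python
import cmath
import math


def contour_integral(f, center, radius, n=256):
    """Counterclockwise integral of f over a circle, trapezoidal rule in the angle."""
    total = 0j
    for j in range(n):
        z = radius * cmath.exp(2j * math.pi * j / n)   # offset of s from the center
        total += f(center + z) * 1j * z                  # f(s) * ds/dtheta
    return total * (2 * math.pi / n)


k, x = 1.3, 0.8


def integrand(s):
    return cmath.exp(1j * s * x) / ((k + s) * (k - s))


# Small circle around s = k only; the other pole at s = -k stays outside.
numeric = contour_integral(integrand, k, 0.5) / (2 * math.pi)
expected = -1j * cmath.exp(1j * k * x) / (2 * k)
print(numeric, expected)
```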

Performing the similar integration for \(x<0\) yields

\[G(x) = -i \frac{e^{-ikx}}{2k}.\]

Combining the two expressions, the final result for the Green's function of the one-dimensional Schrödinger equation is therefore

\[G(x) = -i \frac{e^{ik|x|}}{2k}.\]
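The result can be checked directly against the defining equation. This Python sketch (the value of \(k\) and the step sizes are arbitrary choices) verifies with finite differences that \(G(x) = -i e^{ik|x|}/(2k)\) satisfies \(G'' + k^2 G = 0\) away from the origin and that \(dG/dx\) jumps by \(1\) across \(x=0\), which is what integrating the delta function requires.

```python
import cmath


def green_1d(x, k):
    """G(x) = -i exp(i k |x|) / (2k), the Green's function of d^2/dx^2 + k^2."""
    return -1j * cmath.exp(1j * k * abs(x)) / (2 * k)


k = 1.7

# Away from x = 0: the central second difference should satisfy G'' + k^2 G = 0.
h = 1e-4
x0 = 0.9
second = (green_1d(x0 + h, k) - 2 * green_1d(x0, k) + green_1d(x0 - h, k)) / h**2
residual = second + k**2 * green_1d(x0, k)

# Across x = 0: one-sided slopes should differ by exactly 1.
d = 1e-6
slope_right = (green_1d(2 * d, k) - green_1d(d, k)) / d
slope_left = (green_1d(-d, k) - green_1d(-2 * d, k)) / d
print(abs(residual), slope_right - slope_left)
```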

The general solution to the Schrödinger equation for an arbitrary potential is therefore

\[ \begin{align} \psi (x) &= \psi_0 (x) + \frac{2m}{\hbar^2} \int G(x-y) V(y) \psi (y)\, dy \\ &= \psi_0 (x) - \frac{im}{\hbar^2 k} \int e^{ik|x-y|} V(y) \psi (y)\, dy \end{align} \]

with \(\psi_0 (x)\) a solution to the homogeneous equation.

When the contour integration was performed above, the choice of how to circumvent the poles was important. In quantum mechanics and quantum field theory, the Feynman prescription for avoiding the poles is used: the pole at \(s=k\) is displaced slightly above the real axis and the pole at \(s=-k\) slightly below it (equivalently, \(k \to k + i\epsilon\) with \(\epsilon \to 0^+\)), so that each semicircle encloses exactly one pole, as in the calculation above. This prescription represents the possibility of a particle measured at one point being measured at a different point later, or vice versa: since one pole is enclosed by either choice of integration contour, either direction can occur. The resulting Green's function is often referred to as the Feynman propagator or Feynman Green's function.

However, this is not always the appropriate pole convention for a given physical setting. In electrodynamics, for instance, one is often concerned with finding the future propagation of an electromagnetic field given an initial configuration of sources. Fields radiate only outward from their sources of charge; there is no backward direction for propagation to run. As a result, a different pole convention is used, called the retarded pole convention, which excludes both poles from the contour if \(x<0\) and encloses both poles if \(x>0\). This results in a different Green's function called the retarded propagator or retarded Green's function, which is often the correct Green's function for questions in electromagnetism. The opposite convention, which excludes both poles if \(x>0\) and encloses both if \(x<0\), gives the advanced propagator or advanced Green's function. It is useful, for example, in electromagnetism and field theory when one knows the state of a system near infinity and wants to derive the state at finite locations or times.

A more efficient shortcut, which is often effective, is to take the relevant differential operator, replace each derivative \(d/dx\) with a factor of \(ik\), where \(k\) is the Fourier variable (called \(s\) in the Schrödinger example above), and then take the reciprocal. This immediately yields the Fourier transform of the Green's function up to a multiplicative normalization factor. In quantum field theory, where the Fourier transform of the Green's function is often more immediately useful, this trick saves a lot of work.
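The shortcut amounts to evaluating the operator's symbol and inverting it. In the hedged Python sketch below, an operator with constant coefficients is described by a list `coeffs` of the coefficients of \((d/dx)^0, (d/dx)^1, \ldots\) (a representation chosen for illustration; `green_fourier_shortcut` is a hypothetical name), each derivative is replaced by \(is\), and the reciprocal is returned. The Fourier variable is written \(s\), as in the Schrödinger derivation, to avoid a clash with the constant \(k\). For \(d^2/dx^2 + k^2\) this reproduces \(1/(k^2 - s^2)\), the transform \(g(s)\) found earlier up to its factor \(1/\sqrt{2\pi}\). The shortcut applies only to operators with constant coefficients.

```python
def green_fourier_shortcut(coeffs, s):
    """1 / sum_n coeffs[n] * (i s)^n: the operator's symbol with d/dx -> i s, inverted."""
    symbol = sum(c * (1j * s) ** n for n, c in enumerate(coeffs))
    return 1 / symbol


k = 2.0
coeffs = [k**2, 0.0, 1.0]   # d^2/dx^2 + k^2 as coefficients of (d/dx)^0, (d/dx)^1, (d/dx)^2
for s in (0.0, 0.5, 3.0):
    print(s, green_fourier_shortcut(coeffs, s), 1 / (k**2 - s**2))
```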

A massive scalar field \(\phi (x,t)\) of mass \(m\) in quantum field theory satisfies the Klein-Gordon equation

\[\big(\Box + m^2\big) \phi(x,t) = 0,\]

where \(\Box\) indicates the d'Alembert wave operator:

\[\Box = -\dfrac{\partial^2}{\partial t^2} +\dfrac{\partial^2}{\partial x^2} + \dfrac{\partial^2}{\partial y^2} +\dfrac{\partial^2}{\partial z^2}. \]

Find the Green's function of the Klein-Gordon operator in momentum space by Fourier transform. Note that \(k = \big(E,\vec{k}\big)\) is a vector with four components: the energy of a particle, and its three components of spatial momentum.


In quantum field theory, the Green's function corresponding to a particular field is represented by internal lines in Feynman diagrams. For instance, the photon in quantum field theory is represented by the field \(A_{\mu}\) which describes the electric and magnetic potentials of electromagnetism. The equations of motion of this field are Maxwell's equations, which can be represented in four-vector notation by

\[\big(g_{\mu \nu} \partial^2 - \partial_{\mu} \partial_{\nu}\big) A^{\mu} = j_{\nu}.\]

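This operator cannot be inverted as it stands, because it annihilates pure-gauge fields \(A^{\mu} = \partial^{\mu} \lambda\). After fixing a gauge, the corresponding Green's function in momentum space (here in Landau gauge) is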

\[D_{\mu \nu} (k) = \frac{-i}{k^2} \left(g_{\mu \nu} - \frac{k_{\mu} k_{\nu}}{k^2} \right).\]

This corresponds to the factor which is included in the computation of Feynman diagrams for each photon line:

![Feynman diagram](https://ds055uzetaobb.cloudfront.net/brioche/uploads/kJ8Rg9vf1F-feynman-diagram-ee-scattering.png?width=1200)

The diagrammatic shorthand for the mathematics of particle scattering in quantum field theory, called Feynman diagrams [4]. The wavy line in the middle represents the Green's function for the photon equation of motion.

References

[1] M. T. Homer Reid, Lecture notes for MIT 18.305, Advanced Analytic Methods for Scientists and Engineers, Fall 2015. http://homerreid.dyndns.org/teaching/18.305/.

[2] M. E. Peskin and D. V. Schroeder, An Introduction to Quantum Field Theory. Westview Press, 1995.

[3] D. J. Griffiths, Introduction to Quantum Mechanics, 2nd ed. Pearson, Upper Saddle River, NJ, 2006.

[4] Image from https://en.wikipedia.org/wiki/Feynman_diagram#/media/File:Feynman-diagram-ee-scattering.png, used under Creative Commons licensing for reuse and modification.