Maximum Likelihood Estimation (MLE)

Maximum likelihood estimation (MLE) is a technique used for estimating the parameters of a given distribution, using some observed data. For example, if a population is known to follow a normal distribution but the mean and variance are unknown, MLE can be used to estimate them using a limited sample of the population, by finding particular values of the mean and variance so that the observation is the most likely result to have occurred.

MLE is useful in a variety of contexts, ranging from econometrics to MRIs to satellite imaging. It is also related to Bayesian statistics.

Contents

Formal definition

Let \(x_1, x_2, \ldots, x_n\) be observations from \(n\) independent and identically distributed random variables drawn from a Probability Distribution \(f_0\), where \(f_0\) is known to be from a family of distributions \(f\) that depend on some parameters \(\theta\). For example, \(f_0\) could be known to be from the family of normal distributions \(f\), which depend on parameters \(\sigma\) (standard deviation) and \(\mu\) (mean), and \(x_1, x_2, \ldots, x_n\) would be observations from \(f_0\).

The goal of MLE is to maximize the likelihood function:

\[L = f(x_1, x_2, \ldots, x_n | \theta)=f(x_1 | \theta) \times f(x_2 | \theta) \times \ldots \times f(x_n | \theta)\]

Often, the average log-likelihood function is easier to work with:

\[\hat{\ell} = \frac{1}{n}\log L = \frac{1}{n}\sum_{i=1}^n\log f(x_i|\theta)\]

There are several ways that MLE could end up working: it could discover parameters \(\theta\) in terms of the given observations, it could discover multiple parameters that maximize the likelihood function, it could discover that there is no maximum, or it could even discover that there is no closed form to the maximum and numerical analysis is necessary to find an MLE.

Though MLEs are not necessarily optimal (in the sense that there are other estimation algorithms that can achieve better results), it has several attractive properties, the most important of which is consistency: a sequence of MLEs (on an increasing number of observations) will converge to the true value of the parameters. The following is an example where the MLE might give a slightly poor result compared to other estimation algorithms:

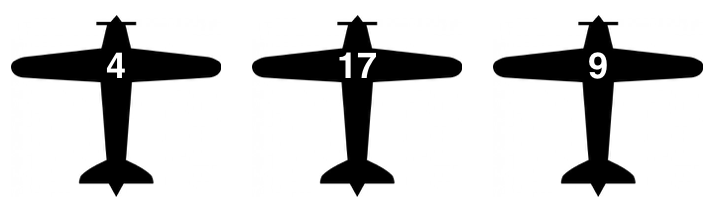

An airline has numbered their planes \(1,2,\ldots,N,\) and you observe the following 3 planes, which are randomly sampled from the \(N\) planes:

What is the maximum likelihood estimate for \(N?\) In other words, what value of \(N\) would, according to conditional probability, make your observation most likely?

Examples

The simplest case is when both the distribution and the parameter space (the possible values of the parameters) are discrete, meaning that there are a finite number of possibilities for each. In this case, the MLE can be determined by explicitly trying all possibilities.

A (possibly unfair) coin is flipped 100 times, and 61 heads are observed. The coin either has probability \(\frac{1}{3}, \frac{1}{2}\), or \(\frac{2}{3}\) of flipping a head each time it is flipped. Which of the three is the MLE?

Here, the distribution in question is the binomial distribution, with one parameter \(p\). Thus

\[\text{Pr}\left(H=61 | p=\frac{1}{3}\right) = \binom{100}{61}\left(\frac{1}{3}\right)^{61}\left(1-\frac{1}{3}\right)^{39} \approx 9.6 \times 10^{-9}\] \[\text{Pr}\left(H=61 | p=\frac{1}{2}\right) = \binom{100}{61}\left(\frac{1}{2}\right)^{61}\left(1-\frac{1}{2}\right)^{39} \approx 0.007\] \[\text{Pr}\left(H=61 | p=\frac{2}{3}\right) = \binom{100}{61}\left(\frac{2}{3}\right)^{61}\left(1-\frac{2}{3}\right)^{39} \approx .040\]

hence the MLE is \(p=\frac{2}{3}\).

Unfortunately, the parameter space is rarely discrete, and calculus is often necessary for a continuous parameter space. For instance,

A (possibly unfair) coin is flipped 100 times, and 61 heads are observed. What is the MLE when nothing is previously known about the coin?

Again, the binomial distribution is the model to be worked with, with a single parameter \(p\). The likelihood function is thus

\[\text{Pr}(H=61 | p) = \binom{100}{61}p^{61}(1-p)^{39}\]

to be maximized over \(0 \leq p \leq 1\). This can be achieved by analyzing the critical points of this function, which occurs when

\[ \begin{align*} \frac{d}{dp}\binom{100}{61}p^{61}(1-p)^{39} &= \binom{100}{61}\left(61p^{60}(1-p)^{39}-39p^{61}(1-p)^{38}\right) \\ &= \binom{100}{61}p^{60}(1-p)^{38}(61(1-p)-39p) \\ &= \binom{100}{61}p^{60}(1-p)^{38}(61-100p) \\ &= 0 \end{align*} \]

so either \(p=0, \frac{61}{100}\), or 1. Thus \(p=\frac{61}{100}\) is the MLE, as otherwise the likelihood function is 0.

This logic is easily generalized: if \(k\) of \(n\) binomial trials result in a head, then the MLE is given by \(\frac{k}{n}\).