Minimax

In game theory, minimax is a decision rule used to minimize the worst-case potential loss; in other words, a player considers all of the best opponent responses to his strategies, and selects the strategy such that the opponent's best strategy gives a payoff as large as possible.

The name "minimax" comes from minimizing the loss involved when the opponent selects the strategy that gives maximum loss, and is useful in analyzing the first player's decisions both when the players move sequentially and when the players move simultaneously. In the latter case, minimax may give a Nash equilibrium of the game if some additional conditions hold.

Minimax is also useful in combinatorial games, in which every position is assigned a payoff. The simplest example is assigning a "1" to a winning position and "-1" to a losing one, but as this is difficult to calculate for all but the simplest games, intermediate evaluations (specifically chosen for the game in question) are generally necessary. In this context, the goal of the first player is to maximize the evaluation of the position, and the goal of the second player is to minimize the evaluation of the position, so the minimax rule applies. This, in essence, is how computers approach games like chess and Go, though various computational improvements are possible to the "naive" implementation of minimax.

Formal definition

Suppose player \(i\) chooses strategy \(s_i\), and the remaining players choose the strategy profile \(s_{-i}\). If \(u_i(S)\) denotes the utility function for player \(i\) on strategy profile \(s\), the minimax of a game is defined as

\[\overline{u_i} = \text{min}_{s_{-i}}\text{max}_{s_i}u_i(s_i, s_{-i})\]

Intuitively speaking, the minimax (for player \(i\)) is one of two equivalent formulations:

- The minimax is the smallest value that the other players can force player \(i\) to receive, without knowing player \(i\)'s strategy

- The minimax is the largest value player \(i\) can guarantee when he is told the strategies of all other players.

Similarly, the maximin is defined as

\[\underline{u_i} = \text{max}_{s_i}\text{min}_{s_{-i}}u_i(s_i, s_{-i})\]

which can be intuitively understood as either of:

- The maximin is the largest value player \(i\) can guarantee when he does not know the strategies of any other player

- The maximin is the smallest value the other players can force player \(i\) to receive, while knowing player \(i\)'s strategy

For example, consider the following payoff matrix (the rows represent the first player's choices and the columns represent the second player's choices):

| 1 | 2 | 3 | |

| 1 | -1 | -2 | -1 |

| 2 | 2 | 2 | 1 |

| 3 | -1 | -1 | 0 |

where both players have a choice between three strategies. In such a payoff matrix, from the first player's perspective:

- The maximin is the largest of the smallest values in each row

- The minimax is the smallest of the largest values in each column

so the maximin is the largest of -2, 1, and -1 (i.e. 1), and the minimax is the smaller of 2, 2, and 1 (i.e. 1).

It is extremely important to note that

\[\underline{u_i} \leq \overline{u_i}\] i.e. the maximin is always at most the minimax.

This can be intuitively understood by noting that in minimax, player \(i\) effectively gets to choose his strategy after learning everyone else's, which can only increase his payoff.

In the above example, the maximin and the minimax are in fact equal. In such a case (which does not always happen!), the minimax strategy for both players gives a Nash equilibrium of the game.

This is especially important in zero-sum games, in which the minimax always gives a Nash equilibrium of the game, as the minimax and maximin are necessarily equal.

Minimax theorem

The minimax theorem establishes conditions on when the minimax and maximin of a function are equal. More precisely, the minimax theorem gives conditions on when

\[\text{max}_x\text{min}_yf(x,y)=\text{max}_y\text{min}_xf(x,y)\]

Formally,

Minimax theorem:

Let \(X,Y\) be two compact convex sets, and \(f: X \times Y \rightarrow \mathbb{R}\) be a continuous function on pairs \((x,y), x \in X, y \in Y\). If \(f\) is convex-concave, i.e.

- \(f(x,y):X \rightarrow \mathbb{R}\) is convex for all fixed \(y\)

- \(f(x,y):Y \rightarrow \mathbb{R}\) is concave for all fixed \(x\)

then

\[\text{max}_x\text{min}_yf(x,y)=\text{max}_y\text{min}_xf(x,y)\]

The application of the minimax theorem to zero-sum games is especially important, as it becomes equivalent to

For a zero-sum game with finitely many strategies, there exists a payoff \(P\) and a mixed strategy for each player such that

- Player 1 can achieve a payoff of at most \(P\), even given player 2's strategy

- Player 2 can achieve a payoff of at most \(-P\), even given player 1's strategy

which is equivalent to establishing a Nash equilibrium.

It is important to note that the minimax strategy may be mixed; in general,

It is not necessarily the case that the pure minimax strategy for each player leads to a Nash equilibrium.

For example, consider the payoff matrix

| 1 | 2 | 3 | |

| 1 | 3 | -2 | 2 |

| 2 | -1 | 0 | 4 |

| 3 | -4 | -3 | 1 |

The minimax choice for the first player is strategy 2, and the minimax choice for the second player is also strategy 2. But both players choosing strategy 2 does not lead to a Nash equilibrium; either player would choose to change their strategy given knowledge of the other's. In fact, the mixed minimax strategies of:

- Player 1 chooses strategy 1 with probability \(\frac{1}{6}\) and strategy 2 with probability \(\frac{5}{6}\)

- Player 2 chooses strategy 1 with probability \(\frac{1}{3}\) and strategy 2 with probability \(\frac{2}{3}\)

is stable and represents a Nash equilibrium.

In combinatorial games

In combinatorial games such as chess and Go, the minimax algorithm gives a method of selecting the next optimal move. Firstly, an evaluation function \(f:\mathbb{P} \rightarrow \mathbb{R}\) from the set of positions to real numbers is required, representing the payoff to the first player. For example, a chess position with evaluation +1.5 is significantly in favor of the first player, while a position with evaluation \(-\infty\) is a chess position in which White is checkmated. Once such a function is known, each player can apply the minimax principle to the tree of possible moves, thus selecting their next move by truncating the tree at some sufficiently deep point.

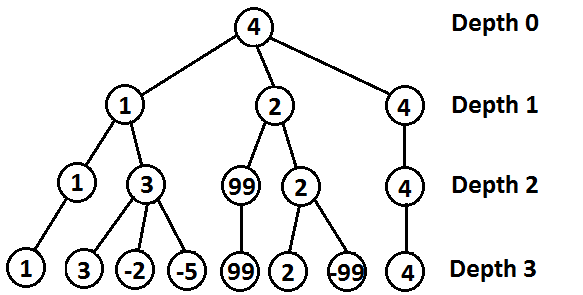

More specifically, given a tree of possible moves in which the leaves have been evaluated using the function \(f\), a player recursively assigns to each node an evaluation based on the following:

- If the node is at even depth, meaning that the first player is on move, the evaluation of the node is the maximum of the evaluations of its children.

- If the node is at odd depth, meaning that the second player is on move, the evaluate of the node is the minimum of the evaluations of its children.

For example, in the below tree the evaluations of the leaves are calculated first (with 99 and -99 representing a won/lost game respectively); this fills in the bottom row of the tree. At depth 2, the first player is on move, so he should select the move that maximizes the evaluation. This means that each evaluation in the depth 2 row is the maximum of the numbers in its subtree. At depth 1, the second player is on move, so he should select the move that minimizes the evaluation. This means that each evaluation in the depth 1 row is the minimum of the numbers in its subtree. Finally, at depth 0 the first player is on move, so he should select the move that maximizes the evaluation, giving an overall evaluation of 4.

Of course, games like chess and go are vastly more complicated, as dozens of moves are possible at any possible point (rather than the 1-3 in the above example). Thus it is infeasible to completely solve these games using a minimax algorithm, meaning that the evaluation function is used at a sufficiently deep point in the tree (for example, most modern chess engines apply a depth of somewhere between 16 and 18) and minimax is used to fill in the rest of this relatively small tree.