Central Limit Theorem

The central limit theorem is a theorem about independent random variables, which says roughly that the probability distribution of the average of independent random variables will converge to a normal distribution, as the number of observations increases. The somewhat surprising strength of the theorem is that (under certain natural conditions) there is essentially no assumption on the probability distribution of the variables themselves; the theorem remains true no matter what the individual probability distributions are.

Let \( X_i \) be the random variable obtained by rolling a fair die and recording

\[ X_i = \begin{cases} 1&\text{ if the die shows 5 or 6}\\0&\text{ if the die shows 1, 2, 3, or 4}. \end{cases} \]

Then \( X_i\) is not normally distributed; it is a discrete variable taking only the values \(0\) and \(1,\) with expected value \( \frac13\) and variance \( \frac29.\) The central limit theorem says that the distribution of \( \frac{X_1+X_2+\cdots+X_n}{n}\) for large \(n\) is very close to a normal distribution, with expected value \( \frac13\) and variance \( \frac2{9n}.\)

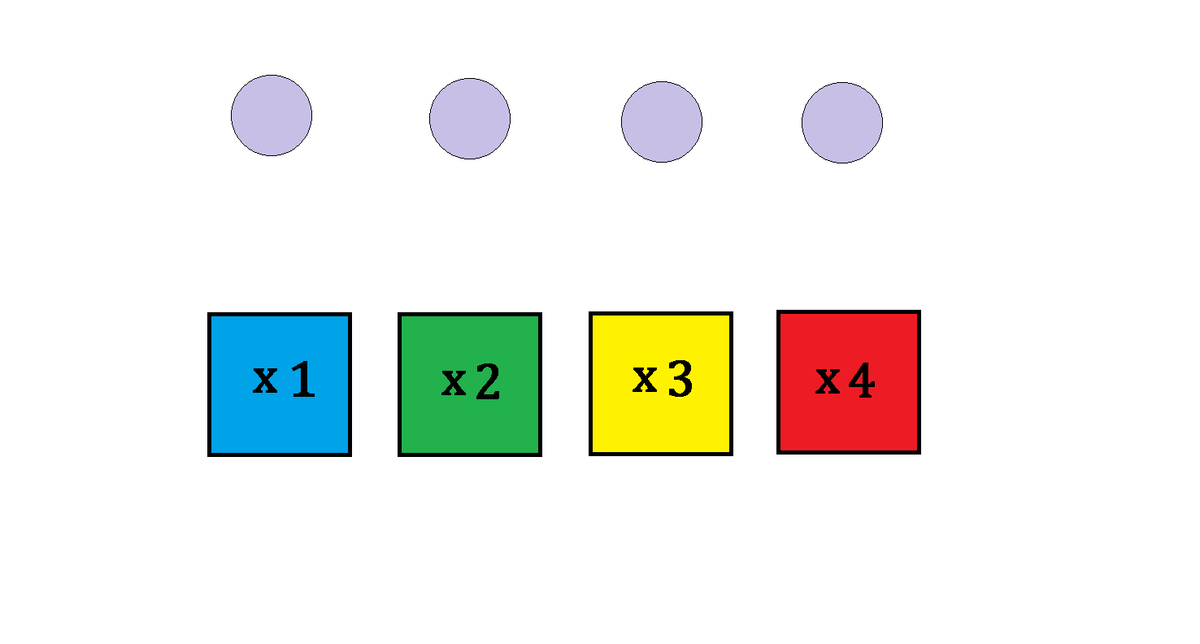
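The die example can be checked empirically. The following is a minimal simulation sketch, not part of the original article: the helper names `indicator_roll` and `sample_averages`, the seed, and the choices \(n = 100\) and \(2000\) trials are all illustrative assumptions. It compares the empirical mean and variance of the average against the predicted values \(\frac13\) and \(\frac2{9n}.\)

```python
import random
import statistics

def indicator_roll(rng):
    """Return 1 if a simulated fair die shows 5 or 6, else 0."""
    return 1 if rng.randint(1, 6) >= 5 else 0

def sample_averages(n, trials, seed=0):
    """Simulate `trials` independent averages, each over n indicator rolls."""
    rng = random.Random(seed)  # fixed seed (an arbitrary choice) for repeatability
    return [sum(indicator_roll(rng) for _ in range(n)) / n for _ in range(trials)]

n, trials = 100, 2000
averages = sample_averages(n, trials)

empirical_mean = statistics.mean(averages)
empirical_variance = statistics.pvariance(averages)
predicted_variance = 2 / (9 * n)

print("empirical mean:    ", empirical_mean)
print("empirical variance:", empirical_variance)
print("predicted variance:", predicted_variance)
```

A histogram of `averages` should look roughly bell-shaped around \(\frac13,\) although with \(n = 100\) the individual bars are still visibly discrete.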

In plain language, given a population with any distribution that has mean \( \mu\) and finite variance \(\sigma^2,\) the sampling distribution of the mean of \(n\) independent observations approaches a normal distribution with mean \(\mu\) and variance \(\frac{\sigma^2}n\) as \(n\) grows.

Formal Statement of the Theorem

The central limit theorem most often applies to a situation in which the variables being averaged have identical probability distribution functions, so the distribution in question is an average measurement over a large number of trials--for example, flipping a coin, rolling a die, or observing the output of a random number generator. There are generalizations of the theorem to other situations, but this wiki will concentrate on the standard applications.

First, the formal statement requires a definition of "converging in distribution" which formalizes the qualitative behavior that the averages get closer and closer to the normal distribution as \(n\) increases:

A sequence of random variables \( Y_n\) converges in distribution to a random variable \( Z\) if

\[\lim_{n\to\infty} P(Y_n \le x) = P(Z \le x),\]

for any real number \(x\) at which the function \( P(Z \le x)\) is continuous.

Classical Central Limit Theorem

Let \(X_i\) be independent, identically distributed ("i.i.d.") random variables with \( E[X_i] = \mu\) and \( \text{var}[X_i] = \sigma^2.\) Let \( S_n = \frac{X_1+X_2+\cdots+X_n}{n}.\) Then the variables \( \sqrt{n}(S_n-\mu)\) converge in distribution to the normal distribution with mean \( 0\) and variance \( \sigma^2.\)
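Convergence in distribution can be made concrete for the die indicator from the introduction. The sketch below is only an illustration under stated assumptions: the function names `normal_cdf` and `sup_distance` are hypothetical, and the code relies on the fact that \(nS_n\) is binomial with parameters \(n\) and \(\frac13\) for that particular \(X_i.\) It computes the largest gap between the exact distribution function of \(\sqrt{n}\big(S_n-\frac13\big)\) and the limiting normal distribution function with variance \(\frac29,\) for a few values of \(n.\)

```python
import math

def normal_cdf(x, sd=1.0):
    """P(Z <= x) for a normal variable Z with mean 0 and standard deviation sd."""
    return 0.5 * math.erfc(-x / (sd * math.sqrt(2)))

def sup_distance(n):
    """Largest gap between the CDF of sqrt(n)(S_n - 1/3) and the N(0, 2/9) CDF.

    Here n*S_n is Binomial(n, 1/3), so P(n*S_n = k) = C(n, k) * 2^(n-k) / 3^n.
    The exact CDF is a step function, so the largest gap occurs at a jump,
    either just before it or at it.
    """
    sigma = math.sqrt(2 / 9)
    total = 3 ** n
    cumulative = 0  # exact integer numerator of P(n*S_n <= k)
    worst = 0.0
    for k in range(n + 1):
        x = math.sqrt(n) * (k / n - 1 / 3)  # value of sqrt(n)(S_n - mu) at count k
        limit = normal_cdf(x, sd=sigma)
        below = cumulative / total          # CDF just to the left of the jump
        cumulative += math.comb(n, k) * 2 ** (n - k)
        at = cumulative / total             # CDF at the jump
        worst = max(worst, abs(below - limit), abs(at - limit))
    return worst

distances = {n: sup_distance(n) for n in (10, 100, 1000)}
for n, d in distances.items():
    print(n, d)
```

The printed gaps shrink as \(n\) increases, which is the qualitative behavior that the definition of convergence in distribution formalizes.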

Using the Theorem to Estimate Probabilities

I roll a fair die \(450\) times. Estimate the probability that at least \( 160\) of the rolls show a \(5\) or \(6.\)

For the \( X_i\) in the introduction, \(\mu = \frac13\) and \( \sigma^2 = \frac29,\) so \( \sqrt{n}\big(S_n-\frac13\big)\) converges in distribution to the normal distribution with mean \(0\) and variance \(\frac29.\) For large \(n,\) \( \sqrt{n}S_n\) should be very nearly normal with mean \(\frac{\sqrt{n}}3\) and variance \( \frac29.\) Multiplying by \(\sqrt{n}\) gives that \( nS_n\) should be roughly normal with mean \( \frac{n}3\) and variance \( \frac{2n}9.\)

Note that \( nS_n\) is the sum of the \( X_i,\) that is, the number of dice rolls showing a \(5\) or \(6.\) For \(n=450,\) the mean is \(150\) and the variance is \(100,\) so the standard deviation is \(10\) and a value of \( 160\) or more lies at least one standard deviation to the right of the mean. Since roughly \(68\%\) of the area under the normal curve lies within one standard deviation of the mean, and the remaining area is split evenly between the two tails, the answer is approximately \( \frac{100-68}2 = 16\) percent. \(_\square\)
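The arithmetic of this estimate can be reproduced in a few lines. This is a rough sketch rather than a prescribed method: the helper `normal_upper_tail` is a name introduced here, built on the standard library's `math.erfc`. It replaces the \(68\%\) rule of thumb with the normal tail area beyond \(z = 1.\)

```python
import math

def normal_upper_tail(z):
    """P(Z >= z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

n, p, threshold = 450, 1 / 3, 160

mean = n * p                 # expected number of rolls showing 5 or 6
variance = n * p * (1 - p)   # variance of that count
z = (threshold - mean) / math.sqrt(variance)

estimate = normal_upper_tail(z)
print("z-score: ", z)
print("estimate:", estimate)
```

The result is close to the \(16\%\) obtained from the \(68\%\) rule; the small difference comes only from rounding \(68\%.\)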

It was not necessary to manipulate the variables; we could have worked with \(\sqrt{n}\big(S_n-\frac13\big)\) instead of \(nS_n.\) The relevant computation would be

\[\sqrt{450}\left(\frac{160}{450}-\frac13 \right) - \sqrt{450}\left(\frac{150}{450}-\frac13 \right) = \frac{\sqrt{2}}3,\]

which is exactly one standard deviation for a normal distribution with variance \( \frac29.\) But it is often more natural to work with the sum instead of the normalized average.

Note also that the facts about the mean and the variance stated in the theorem are elementary: the mean of the average is the average of the means, and, as long as the variables are independent, the variance of the average is the average of the variances divided by \(n.\) The above example required the central limit theorem only in order to get a good estimate for the probability that the sum would exceed its mean by one standard deviation; the central limit theorem gives an assurance that using the relevant estimate for a normally distributed variable will be roughly accurate.
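For reference, the elementary computation for i.i.d. variables with the notation of the theorem can be written out as follows (the first line uses only linearity of expectation, the second uses independence):

\[ \begin{align} E[S_n] &= \frac1n \sum_{i=1}^n E[X_i] = \frac{n\mu}n = \mu, \\ \text{var}[S_n] &= \frac1{n^2} \sum_{i=1}^n \text{var}[X_i] = \frac{n\sigma^2}{n^2} = \frac{\sigma^2}n. \end{align} \]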

Continuity Correction

For finer approximations involving discrete variables, the standard convention is to employ a continuity correction, which adjusts the bounds of the normally distributed limit variable by half a unit. For instance, in the example in the previous section, the estimate of the probability that the dice showed at least \( 160\) \(5\)s and \(6\)s used \(P(Y_{450} \ge 160),\) where \( Y_{450} \) is the count of \(5\)s and \(6\)s, with \( Y_{450}\) approximated by a continuous, normally distributed variable.

But in fact \( Y_{450} \) is discrete; its values are always integers. The same probability therefore also equals \( P(Y_{450} \ge 159.1)\) or \( P(Y_{450} \ge 159.9),\) yet each of these gives a (slightly) different answer when \( Y_{450} \) is approximated by a continuous variable. The standard remedy is to adjust by \( 0.5,\) half of the minimum increment of \( Y_{450},\) which tends to give the most accurate approximation in practice. So a more accurate answer to the above exercise uses \( P(Y_{450} \ge 159.5),\) which requires evaluating the normal distribution at \( z=0.95\) instead of \(z=1.\) This gives a value of \( 17.11\%\) instead of \(15.87\%,\) which is closer to the exact binomial probability.
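The effect of the correction can be checked against the exact binomial tail. The sketch below is an illustration only; `normal_upper_tail` and `binomial_upper_tail` are names introduced here, and the exact tail is computed with integer arithmetic for the specific success probability \(\frac13\) of this example.

```python
import math

def normal_upper_tail(z):
    """P(Z >= z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def binomial_upper_tail(n, threshold):
    """Exact P(Y >= threshold) for Y ~ Binomial(n, 1/3).

    Each outcome with k successes has probability 2^(n-k) / 3^n,
    so the tail is an exact ratio of integers.
    """
    favourable = sum(math.comb(n, k) * 2 ** (n - k) for k in range(threshold, n + 1))
    return favourable / 3 ** n

n, threshold = 450, 160
mean = n / 3
sd = math.sqrt(2 * n / 9)

exact = binomial_upper_tail(n, threshold)
plain = normal_upper_tail((threshold - mean) / sd)            # uses z = 1
corrected = normal_upper_tail((threshold - 0.5 - mean) / sd)  # uses z = 0.95

print("exact binomial tail:   ", exact)
print("normal, no correction: ", plain)
print("normal, with correction:", corrected)
```

In this example the corrected estimate lands noticeably nearer to the exact tail than the uncorrected one.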

You have an (extremely) biased coin that shows heads with probability \( 99 \% \) and tails with probability \( 1 \% \).

If you toss it \( 10000 = 10^4 \) times, what is the probability that fewer than \( 100 \) tails show up, to three significant figures? Make use of a normal distribution as an approximation to solve this problem.

Note: The case of \( 100 \) tails is not to be included in the probability.

Applications to Sampling

The central limit theorem can be used to answer questions about sampling procedures. It can be used in reverse, to approximate the size of a sample given the desired probability; and it can be used to examine and evaluate assumptions about the initial variables \( X_i.\)

A scientist discovers a potentially harmful compound present in human blood. A study shows that the distribution of levels of the compound among adult men has a mean value of \( 13 \text{ mg}/\text{dl},\) with standard deviation \(4 \text{ mg}/\text{dl}.\) The scientist wishes to take a sample of adult men for another study. How many men must she sample so that the probability that the mean value of the level of the compound in her sample is between \( 12 \) and \( 14 \text{ mg}/\text{dl}\) is at least \( 98\%?\)

The sample mean will have mean \( 13 \) and variance \( \frac{16}n,\) where \(n\) is the number of men sampled. For a normal distribution, a \(98\%\) probability corresponds to lying within \( 2.33\) standard deviations of the mean. We want \(2.33\) standard deviations to equal \( 1\text{ mg}/\text{dl},\) so one standard deviation should be \( \frac1{2.33},\) and hence the variance should be \( \frac{1}{(2.33)^2}.\) This gives

\[ \begin{align} \frac{16}n &= \frac1{(2.33)^2} \\ n &= 16(2.33)^2 \approx 86.9. \end{align} \]

So the sample should have at least \( 87\) men. \(_\square\)
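The same sample-size calculation can be sketched in code. The function name `required_sample_size` and its parameters are hypothetical, introduced only for this illustration; it uses `statistics.NormalDist` from the Python standard library to obtain the two-sided \(z\)-value rather than the rounded table value \(2.33.\)

```python
import math
from statistics import NormalDist

def required_sample_size(sigma, margin, probability):
    """Smallest n with P(|sample mean - mu| <= margin) >= probability,
    assuming the sample mean is approximately normal with variance sigma^2 / n."""
    # Two-sided interval: leave (1 - probability) / 2 in each tail.
    z = NormalDist().inv_cdf((1 + probability) / 2)
    return math.ceil((z * sigma / margin) ** 2)

sample_size = required_sample_size(sigma=4, margin=1, probability=0.98)
print(sample_size)
```

Because the unrounded \(z\)-value is slightly smaller than \(2.33,\) the intermediate value of \(n\) is slightly below \(86.9,\) but it rounds up to the same sample size.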

A coin is tossed 200 times. It comes up heads 120 times. Is the coin fair?

The central limit theorem says that, if the coin is fair, the number of heads is approximately normally distributed, with mean \( 100\) and variance \(50.\) Two standard deviations above the mean is \(100+2\sqrt{50} \approx 114.1,\) and three is \(100+3\sqrt{50} \approx 121.2,\) so \(120\) heads is nearly a 3-sigma event. The probability that a fair coin comes up heads at least \(120\) times is approximately \(0.29\%\) \(\big(\)which comes from applying the continuity correction and looking up \(\frac{19.5}{\sqrt{50}} \approx 2.76\) in a \(z\)-table of values of the function \(P(X \ge x)\) for a normally distributed variable \(X\big).\) At a significance level of \(5\%\) or even \(1\%,\) we reject the null hypothesis that the coin is fair. \(_\square\)
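As a cross-check of the coin example, the following sketch computes the continuity-corrected normal estimate alongside the exact binomial tail under the fair-coin hypothesis. The variable names are illustrative, and the comparison against a \(1\%\) threshold treats the test as one-sided, which is an assumption made here for simplicity.

```python
import math

def normal_upper_tail(z):
    """P(Z >= z) for a standard normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

n, heads = 200, 120
mean = n * 0.5             # expected heads for a fair coin
sd = math.sqrt(n * 0.25)   # standard deviation of the head count

# Continuity correction: P(Y >= 120) is approximated by P(normal >= 119.5).
z = (heads - 0.5 - mean) / sd
p_normal = normal_upper_tail(z)

# Exact tail: count outcomes with at least 120 heads among 2^200 equally likely ones.
p_exact = sum(math.comb(n, k) for k in range(heads, n + 1)) / 2 ** n

print("z-score:        ", z)
print("normal estimate:", p_normal)
print("exact tail:     ", p_exact)
print("reject at 1%:   ", p_normal < 0.01)
```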